Published: 2022-06-16

Visual Understanding and Computational Imaging


Received: 2022-01-15; revised: 2022-03-23; preprint: 2022-03-30

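About the authors:

Dun Xiong (顿雄), born 1986, male, assistant researcher. Research interests: spectral imaging, computational imaging, and micro/nano fabrication. E-mail: dunx@tongji.edu.cn

Fu Qiang (付强), born 1985, male, Research Scientist. Research interests: spectral imaging, computational imaging, and micro/nano optics. E-mail: qiang.fu@kaust.edu.sa

Li Haotian (李浩天), born 1994, male, master's degree. Research interests: industrial applications of light-field imaging. E-mail: lihaotiansky@vommatec.com

Sun Tiancheng (孙天成), born 1995, male, Research Scientist. Research interests: combining rendering and inverse rendering, and computational photography. E-mail: kevin.kingo0627@gmail.com

Wang Jian (王建), born 1987, male, Research Scientist. Research interests: computational imaging and 3D sensing. E-mail: jwang4@snapchat.com

Sun Qilin (孙启霖), born 1992, corresponding author, male, assistant professor. Research interests: computational imaging, optics, depth/ultrafast imaging, physics-based rendering and simulation, deep learning, and optimization. E-mail: sunqilin@cuhk.edu.cn

*Corresponding author: Sun Qilin, sunqilin@cuhk.edu.cn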

CLC number: TB811.3

Document code: A

Article ID: 1006-8961(2022)06-1840-37


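Summary

Computational imaging is a new generation of imaging technology that integrates optical hardware, image sensors, and algorithms. It breaks through the limits of traditional imaging in the depth (high dynamic range, low light) and breadth (spectrum, light field, 3D) of the information that can be acquired. This review follows new design methods, new algorithms, and application scenarios in computational imaging, and surveys the main progress in the field by drawing on the domestic and international literature and related reports. It covers end-to-end joint design of optics and algorithms, high dynamic range imaging, light-field imaging, spectral imaging, lensless imaging, low-light imaging, 3D imaging, and computational photography, with emphasis on the current state, frontiers, open problems, and trends. End-to-end joint design of optics and algorithms includes differentiable diffractive optics models, refractive optics models, and complex-lens models based on differentiable ray tracing. For high dynamic range optical imaging, the review explains the principle and then the strengths, weaknesses, and industrial applications of methods based on optical modulation, multiple exposures, multi-sensor fusion, and algorithms. For light-field imaging, it covers domestic and international progress and industrial applications of light-field-based 3D reconstruction in super-resolution, depth estimation, and 3D dimensional measurement, as well as progress in particle velocimetry and 3D flame reconstruction. For spectral imaging, it covers hyperspectral acquisition with multi-channel filters, with deep learning and inversion of wavelength response curves, and with optimized diffraction gratings, multiplexing, and metasurfaces. Lensless imaging covers the design and optimization of planar optical elements and algorithms for high-quality image reconstruction. Low-light imaging covers noise removal based on single frames, multiple frames, flash, and new sensors. 3D imaging focuses on recent solutions to the difficulties of active depth acquisition, including strong ambient light (such as sunlight) and strong indirect light (such as inter-reflections on concave surfaces and scattering in fog). Computational photography is a branch of computational imaging that grew out of traditional photography and places more weight on digital computation in image capture. Its main research and application question is how to use reasonable computational resources to render the image users find most satisfying when the physical size of the optics and the image quality are constrained.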

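Keywords

end-to-end imaging; high dynamic range imaging; light-field imaging; spectral imaging; lensless imaging; low-light imaging; active 3D imaging; computational photography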

Abstract

Computational imaging breaks the limits of traditional digital imaging and acquires information that is deeper (e.g., high dynamic range and low-light imaging) and broader (e.g., spectral, light-field, and 3D imaging). Driven by industry, especially mobile phone manufacturers and the medical and automotive sectors, computational imaging has become ubiquitous in daily life and plays a critical role in accelerating industrial change. It is a new imaging technique that combines illumination, optics, image sensors, and post-processing algorithms. This review takes the latest methods, algorithms, and applications as its main thread and reports state-of-the-art progress by jointly analyzing articles and reports at home and abroad. It covers end-to-end design of optics and algorithms, high dynamic range imaging, light-field imaging, spectral imaging, lensless imaging, low-light imaging, 3D imaging, and computational photography, and focuses on the development status, frontiers, hot issues, and trends of each topic. Camera systems have long been designed in separate steps: experience-driven lens design followed by custom-designed post-processing. This general-purpose approach succeeded in the past but leaves open the question of how to serve specific tasks and how to find the best compromise among optics, post-processing, and cost. Recent advances aim to bridge this gap in an end-to-end fashion. To realize joint design of optics and algorithms, differentiable optics models have been developed step by step, including the differentiable diffractive optics model, the differentiable refractive optics model, and the differentiable complex-lens model based on differentiable ray tracing. Beyond the goal of capturing a sharp and clear image on the sensor, end-to-end design offers enormous flexibility: it can find a compromise between optics and post-processing and also opens up the design space for optical encoding. 
End-to-end camera design offers a competitive alternative to conventional optics and camera system design. High dynamic range (HDR) imaging has become a commodity imaging technique, as shown by its applications across many domains, including mobile consumer photography, robotics, drones, surveillance, content capture for display, driver assistance systems, and autonomous driving. This review analyzes the advantages, disadvantages, and industrial applications of a series of HDR imaging techniques, including optical modulation, multi-exposure, multi-sensor fusion, and post-processing algorithms. Conventional cameras do not record most of the information about the light distribution entering from the world. Light-field imaging records the full 4D light field, measuring the amount of light traveling along each ray that intersects the sensor. This review reports how the light field is applied to super-resolution, depth estimation, 3D measurement, and related tasks, and analyzes state-of-the-art methods and industrial applications. It also reports research progress and industrial applications in particle image velocimetry and 3D flame imaging. Spectral imaging has been used widely and successfully in resource assessment, environmental monitoring, disaster warning, and other remote sensing domains. Spectral image data can be described as a three-dimensional cube. The technique captures a spectrum for each pixel in an image; as a result, the images give a detailed characterization of the scene or object. This review explains several ways to acquire spectral volume data, including current multi-channel filters, inverse solution of wavelength response curves based on deep learning, diffraction gratings, multiplexing, metasurfaces, and other optimizations for hyperspectral acquisition. 
Lensless imaging eliminates the need for geometric isomorphism between a scene and an image and allows compact, lightweight imaging systems. It has been applied to bright-field imaging, cytometry, holography, phase recovery, fluorescence, and the quantitative sensing of specific sample properties derived from such images. However, the low signal-to-noise ratio of the reconstruction makes it a challenging and still unsolved inverse problem. This review reports recent progress in designing and optimizing planar optical elements and in high-quality image reconstruction algorithms for specific applications. Imaging under low illumination is affected by Poisson noise, which becomes relatively stronger as the power of the light source decreases. At the same time, visual degradations such as reduced visibility, strong noise, and color bias occur. This review analyzes the challenges of low-light imaging and summarizes the corresponding solutions, including noise removal based on single or multiple frames, flash, and new sensors for conditions in which the sensor is exposed to low light. Shape acquisition of three-dimensional objects plays a vital role in many real-world applications, including automotive, machine vision, reverse engineering, industrial inspection, and medical imaging. This review reports the latest widely applied active solutions, including structured light, direct time of flight, and indirect time of flight. It also notes the difficulties of depth acquisition with these active methods, such as ambient light (e.g., sunlight) and indirect interference (e.g., inter-reflection on concave surfaces and scattering in fog). Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. 
It not only improves camera capability but also adds features that were not possible at all with traditional film-based photography. Computational photography is an essential branch of computational imaging that developed from traditional photography, but it emphasizes capturing photographs digitally. Constrained by the physical size of the optics and by image quality, it focuses on allocating computational resources sensibly to produce high-quality images that please the user. As 90 percent of the information transmitted to the human brain is visual, imaging systems will play a vital role in most future intelligent systems. Computational imaging greatly extends human information acquisition in both depth and scope. For new technologies such as the metaverse, it offers a general input tool that collects multi-dimensional visual information for rebuilding the virtual world. This review covers key technological developments, applications, insights, and challenges of recent years and examines current trends to predict future capabilities.

Key words

end-to-end camera design; high dynamic range imaging; light-field imaging; spectral imaging; lensless imaging; low light imaging; active 3D imaging; computational photography

0 Introduction

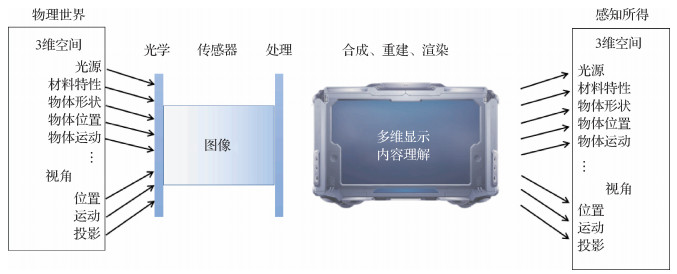

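Physical space contains information in many dimensions, such as the source spectrum, reflectance spectrum, polarization state, 3D shape, ray angle, and material properties. The image an imaging system finally forms depends on the source spectrum, the source position, the optical properties of object surfaces (for example the bidirectional transmittance/scattering/reflectance distribution functions), and the 3D shape of objects. Traditional optical imaging, however, relies on experience-driven optical design that optimizes metrics such as the point spread function (PSF) and the modulation transfer function (MTF), with the goal of a sharper image and more faithful color on the detector. It is usually "what you see is what you get", with little ability to sense multi-dimensional information. With advances in optics, new optoelectronic devices, algorithms, and computing resources, computational imaging, which integrates all of them, has gradually freed up our ability to sense multi-dimensional information in physical space. At the same time, progress in display technology, especially 3D and even 6D cinema and virtual reality (VR)/augmented reality (AR), provides a platform for presenting multi-dimensional information. Take mobile phones, where physical size is tightly constrained: phone makers now work closely with academia. On the algorithm side, HDR imaging, low-light enhancement, color optimization, demosaicing, denoising, and even relighting are gradually being deployed on phones, and beyond the traditional image processing pipeline, neural-network edge computing on phones is maturing. On the optics side, aspheric and even freeform lenses are used to correct aberrations, and the Bayer filter is optimized to balance light throughput and color.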

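This review uses end-to-end joint design of optics and algorithms, high dynamic range imaging, light-field imaging, spectral imaging, lensless imaging, polarization imaging, low-light imaging, active 3D imaging, and computational photography as concrete examples to describe the current state, frontiers, open problems, trends, and application guidance of computational imaging. The overall framework is shown in Fig. 1.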

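End-to-end joint design of optics and algorithms (end-to-end camera design) is a research direction that has emerged in recent years. For an imaging system, it breaks down the barrier between optical design and image post-processing and looks for the best trade-off between optics and algorithms in hardware cost, manufacturability, size and weight, image quality, algorithmic complexity, and special functions, so as to reach the best solution under the design requirements. Breakthroughs in this area give phone makers, industry, automotive, aerospace exploration, and defense a new and simpler solution: they reduce the dependence of optical design on designer experience, optimize the image post-processing automatically at the same time, give camera design more degrees of freedom, and offer a new route to lightweight optics and special functions.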

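High dynamic range (HDR) imaging, in computer graphics and photography, refers to techniques that achieve a larger exposure dynamic range (the ratio between the brightest and darkest details) than ordinary digital imaging. In photography, dynamic range is usually described as a difference in exposure value (EV): 1 EV corresponds to a factor of two in exposure and is commonly called one stop. The maximum dynamic range of natural scenes is about 22 stops, urban night scenes can reach about 40 stops, and the human eye captures about 10 to 14 stops. HDR imaging generally means a dynamic range above 13 stops, or 8 000:1 (78 dB), and involves acquisition, processing, storage, and display. It aims to capture detail in both brighter and darker regions, which brings richer information and stronger visual impact. HDR imaging is a core competitive feature of phone cameras today and a basic requirement for industrial and automotive cameras.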
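The relation between stops, contrast ratio, and decibels used above can be checked with a few lines of Python. This is only an illustrative sketch: the function names are made up for this example, and the decibel value uses the 20·log10 convention implied by "8 000:1 (78 dB)". Thirteen stops correspond to 2^13 = 8192:1, which is about 78.3 dB.

```python
import math

def stops_to_ratio(stops: float) -> float:
    """Contrast ratio spanned by a dynamic range given in stops (EV); each stop doubles it."""
    return 2.0 ** stops

def ratio_to_db(ratio: float) -> float:
    """Dynamic range in decibels, using the 20*log10 convention (assumed here)."""
    return 20.0 * math.log10(ratio)

def stops_to_db(stops: float) -> float:
    """Convenience wrapper: stops -> ratio -> dB."""
    return ratio_to_db(stops_to_ratio(stops))

# Print the figures quoted in the text for a few dynamic ranges.
for stops in (10, 13, 14, 22):
    print(f"{stops:2d} stops = {stops_to_ratio(stops):,.0f}:1 = {stops_to_db(stops):.1f} dB")
```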

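Light-field imaging (LFI) records both the spatial position and the angle of light rays. It is a new approach to 3D measurement and is becoming an emerging non-contact measurement technique. Since the invention of photography, image capture has meant acquiring information in a 2D projection of the scene. A light field provides not only the 2D projection but also one more dimension: the angle of the rays that reach that projection. Because it holds both the ray directions and the 2D projection of the scene, it enables different functions. For example, the projection can be moved to a different focal distance, which lets the user refocus the image freely after capture. The viewpoint of the captured scene can also be changed. Light-field imaging is gradually being applied in industry, virtual reality, life sciences, and 3D flow measurement, where it helps obtain real light-field information and complex 3D spatial information quickly.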

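Spectral imaging grew out of traditional color imaging and captures the spectral information of a target. Every object has its own spectral signature, much as every person has a different fingerprint, so the spectrum is regarded as a "fingerprint" for target identification. Acquiring images of the target in contiguous narrow bands yields a data cube with spatial and spectral dimensions, which greatly strengthens target identification and analysis. Spectral imaging is a powerful tool for scientific research and engineering, is widely used in military, industrial, and civilian fields, and plays an important role in economic development and national security. For example, it identifies ground objects such as rivers, sand and soil, vegetation, and rocks and minerals well, so it has important applications in precision agriculture, environmental monitoring, resource exploration, and food safety. It is also expected to reach terminals such as mobile phones and self-driving cars. Spectral imaging is currently one of the active research directions in computer vision and graphics.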

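Lensless imaging offers a new way to shrink imaging systems further (Boominathan et al., 2022). Traditional imaging systems rely on point-to-point imaging, and their minimum size is still limited by core specifications such as focal length, aperture, and field of view. Lensless imaging abandons the point-to-point mapping of a conventional lens and projects each point in object space to a specific pattern in image space. Different object points superpose and are encoded on the image plane, forming a measurement that the human eye cannot interpret but that an algorithm can decode to recover the image. The approach is highly competitive in compactness, and its resolution has improved greatly as decoding algorithms have advanced. It therefore has great potential in wearable cameras, portable microscopes, endoscopes, and the Internet of Things. In addition, its inherent optical encryption can protect sensitive biometric features of the target, which matters for privacy-preserving AI imaging.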

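Low-light imaging is also one of the active topics in computational photography. Phone photography has become a common way to record daily life, and night mode is a technology that phone makers compete on. Cameras on different phones differ little in bright daylight but differ markedly in weak light at night. The reason is that imaging depends on the lens collecting photons from the scene, and the sensor adds unavoidable noise during photoelectric conversion, gain, and analog-to-digital conversion. In daylight there is plenty of light, the signal-to-noise ratio is high, and image quality is high. At night light is weak, the signal-to-noise ratio drops by several orders of magnitude, and image quality is low. Some phones ship a night mode based on computational photography algorithms, such as denoising based on single frames, multiple frames, or RYYB (red, yellow, yellow, blue) arrays, which improves photo quality effectively. There is still considerable room for improvement.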

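Active 3D imaging aims to obtain the point cloud of an object or scene. Passive methods, represented by binocular stereo matching, struggle with depth in textureless regions and regions with repeated texture. Active-illumination methods are generally more robust, work in the dark, and produce dense, accurate point clouds. According to the property of light they use, active methods divide into structured light; methods based on the speed of light, namely time of flight (TOF), including continuous-wave TOF (indirect TOF, iTOF) and direct TOF (dTOF); and methods based on the wave nature of light, such as interferometry. Active 3D imaging with the first two is already widely used in daily life. Although active methods improve accuracy by illuminating the scene, they suffer from problems caused by ambient light and multipath interference, both of which have improved considerably in recent research.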

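Computational photography is a branch of computational imaging that grew out of traditional photography. Traditional photography concentrates on imaging better with optical components, as in the lens research of camera makers such as Canon and Sony. Computational photography places more weight on digital computation in image capture. As the computing power of mobile devices has grown rapidly, phone photography has become its main research direction: how to use reasonable computational resources to render the image users find most satisfying when the physical size of the optics and the image quality are constrained. The field has advanced considerably in recent years and its scope has widened to problems such as night-sky photography, portrait relighting, and automatic photo enhancement. This review focuses on automatic white balance, autofocus, synthetic depth of field, and burst photography. For reasons of space, it covers only work whose goal is to recover the true information of the real scene being photographed.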

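1 International research status

1.1 End-to-end joint design of optics and algorithms

Camera design involves complex trade-offs among image-quality factors (for example sharpness, contrast, and color fidelity), weighed against practicality, cost, form factor, and weight. In general, a high-quality imaging system needs several optical elements to remove the various aberrations. The traditional design process relies on optical design tools such as ZEMAX and Code V and shapes the point spread function over the image field, depth of field, or zoom range using merit functions. This process requires a great deal of optical design experience, and the PSF design usually ignores the subsequent image processing and the specific application, or the need to encode extra information in the image. As a result, how to optimize the imaging system as a whole, reduce the dependence on designers, find the best trade-off between cost and performance, and find a joint optimum of optics and algorithms for a specific task has gradually become a research focus.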

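Joint design of optics and algorithms (Peng et al., 2019; Sun et al., 2018) and data-driven end-to-end design (Sitzmann et al., 2018) are building a bridge between optical design and image post-processing. Joint design has achieved great success in depth estimation (Haim et al., 2018; Chang and Wetzstein, 2019; Wu et al., 2019b), large field-of-view imaging (Peng et al., 2019), extended depth-of-field imaging (Chang and Wetzstein, 2019), optimal optical sampling for single-photon avalanche diode (SPAD) sensors (Sun et al., 2020b), HDR imaging (Sun et al., 2020a; Metzler et al., 2020), and hyperspectral and depth imaging (Baek et al., 2021). Chang et al. (2018) applied end-to-end joint design to image classification. However, the differentiable optics models in these works are limited to a single differentiable layer, the paraxial approximation, and diffractive optical elements (DOEs) made of a single optical material, which greatly restricts the design space. Recently, end-to-end design of complex lenses based on neural-network simulation (Tseng et al., 2021) and on PSF simulation by differentiable ray tracing (Halé et al., 2021), together with methods that use differentiable ray tracing to skip the PSF and directly build a differentiable relation between the optical parameters and the final image (Sun et al., 2021b; Sun, 2021c), has pushed end-to-end camera design to a new level.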
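To make the "single-layer, paraxial DOE" model concrete, the following NumPy sketch maps a DOE height profile to a phase delay and then to a far-field PSF. It is a simplified illustration, not the model of any cited paper: the thin-element phase formula, the Fraunhofer (single FFT) propagation, the refractive index 1.46, and the grid size are all assumptions chosen for the example. In an end-to-end pipeline the same expressions would be written in an automatic-differentiation framework so that gradients can flow back to the height map.

```python
import numpy as np

def doe_phase(height_m, wavelength_m, refractive_index=1.46):
    """Phase delay of a thin DOE: 2*pi*(n - 1)*h / lambda (thin-element approximation)."""
    return 2.0 * np.pi * (refractive_index - 1.0) * height_m / wavelength_m

def psf_from_pupil(phase, aperture):
    """Far-field (Fraunhofer) intensity PSF of the pupil aperture*exp(i*phase), normalized to sum 1."""
    pupil = aperture * np.exp(1j * phase)
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

# Illustrative setup: circular aperture on a 64x64 grid and a flat (unmodulated) element.
n = 64
yy, xx = np.mgrid[:n, :n] - n // 2
aperture = (xx**2 + yy**2 <= (n // 4) ** 2).astype(float)
height = np.zeros((n, n))
psf = psf_from_pupil(doe_phase(height, 550e-9), aperture)
```

With a flat element the PSF is the diffraction pattern of the aperture, peaked at the grid center; a non-zero `height` map redistributes that energy, which is the degree of freedom that joint optimization exploits.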

1.2 High dynamic range imaging

1.2.1 Principle of HDR imaging

There are six main ways to acquire HDR image data. For the image sensor itself, the dynamic range (DR) can be written as

$$ \mathrm{DR}=20 \lg \frac{I_{\max }}{I_{\min }}=20 \lg \frac{q Q_{\mathrm{sat}} / t_{\mathrm{int}}-i_{\mathrm{dc}}}{\dfrac{q}{t_{\mathrm{int}}} \sqrt{i_{\mathrm{dc}} t_{\mathrm{int}} / q+\sigma_{r}^{2}}} $$

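where $I_{\max}$ and $I_{\min}$ are the largest and smallest detectable photocurrents, $q$ is the electron charge, $Q_{\mathrm{sat}}$ is the full-well capacity (in electrons), $t_{\mathrm{int}}$ is the integration time, $i_{\mathrm{dc}}$ is the dark current, and $\sigma_{r}$ is the read noise (in electrons).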
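As a rough numerical illustration of the sensor dynamic range expression, the sketch below evaluates it in Python. The reading of the symbols is an assumption (q as the electron charge, Q_sat as full-well capacity in electrons, t_int as integration time, i_dc as dark current in amperes, σ_r as read noise in electrons), and the parameter values are invented for the example rather than taken from any datasheet.

```python
import math

ELECTRON_CHARGE = 1.602176634e-19  # coulombs

def sensor_dynamic_range_db(q_sat_e, i_dc_amp, t_int_s, sigma_r_e):
    """Evaluate DR = 20*log10(I_max / I_min) with the symbol meanings assumed above."""
    # Largest non-saturating photocurrent: full-well charge per integration time minus dark current.
    i_max = ELECTRON_CHARGE * q_sat_e / t_int_s - i_dc_amp
    # Smallest detectable photocurrent: dark-current shot noise plus read noise, as a current.
    noise_e = math.sqrt(i_dc_amp * t_int_s / ELECTRON_CHARGE + sigma_r_e ** 2)
    i_min = ELECTRON_CHARGE / t_int_s * noise_e
    return 20.0 * math.log10(i_max / i_min)

# Hypothetical sensor: 10 000 e- full well, 1 fA dark current, 10 ms integration, 2 e- read noise.
print(f"{sensor_dynamic_range_db(10_000, 1e-15, 10e-3, 2.0):.1f} dB")
```

The expression shows why longer integration does not help indefinitely: dark-current shot noise grows with t_int while the saturation current falls.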

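1.2.2 HDR imaging in image sensors and its industrialization

Mainstream international sensor makers such as Sony, Samsung, OmniVision, and onsemi all offer HDR sensor products, typically with a dynamic range of up to 120 dB and up to 140 dB in special modes. The main schemes in mass production are: BME (binned multiplexed exposure), which alternates long and short exposures by row; SME (spatially multiplexed exposure), which arranges long and short exposures in a checkerboard (Nayar and Mitsunaga, 2000; Hanwell et al., 2020; McElvain et al., 2021); QBC (quad Bayer coding), which uses four sub-pixels (Gluskin, 2020; Okawa et al., 2019; Jiang et al., 2021); DOL (digital overlap)/staggered HDR, which outputs long and short exposures quasi-simultaneously; DCG (dual conversion gain) HDR, which applies two signal gains to the same pixel; and split (sub-)pixel designs.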

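1.2.3 HDR imaging based on light-intensity modulation

HDR imaging based on intensity modulation usually divides into static and dynamic modulation. Static modulation attenuates light with filters. Examples include a 3×3 array of neutral density filters (Manakov et al., 2013), which reaches a dynamic range of 18 stops (108 dB), 2×2 block attenuation with multiple exposures (Suda et al., 2021), and the BME, SME, and QBC schemes described above. Alghamdi et al. (2021) attach a mask trained with deep learning to the sensor surface and combine it with a restoration algorithm to capture HDR images. Metzler et al. (2020) learn a diffractive optical element directly to shape the PSF and capture highlight information, but the results still have considerable defects. Meanwhile, Sun et al. (2020a) use a low-rank decomposition of the diffractive element to resolve the poor convergence of DOE training and to modulate global information, extending the dynamic range by 8 stops; the peak signal-to-noise ratio (PSNR) improves by more than 7 dB over earlier methods and the HDR-VDP2 score by more than 6 percentage points. Polarization cameras are a recent product that overlays linear polarizers at four angles on a conventional sensor. Natural light is attenuated by different amounts at different polarization angles, and this can be used to fuse an HDR image (Wu et al., 2020b; Ting et al., 2021).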

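Dynamic intensity modulation uses an LCD, LCoS, or DMD to modulate the light intensity at different spatial positions (Yang et al., 2014; Feng et al., 2016, 2017; Mazhar and Riza, 2020; Niu et al., 2021; Martel et al., 2020; Martel and Wetzstein, 2021; Guan et al., 2021), with feedback that suppresses strong light and boosts weak light to reach a larger dynamic range.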

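1.2.4 HDR imaging based on multi-frame fusion

Multi-exposure fusion (Debevec and Malik, 1997, 2008; Grossberg and Nayar, 2003; Hasinoff et al., 2010; Mann and Picard, 1995; Mertens et al., 2009; Reinhard et al., 2010) is widely used in consumer electronics and automotive-grade HDR sensors. Faster HDR fusion techniques (Hasinoff et al., 2016; Heide et al., 2014; Mildenhall et al., 2018) also handle low-light processing and denoising, which improves HDR image quality.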

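Multi-exposure fusion faces the major challenge of motion blur. HDR image stitching (Kang et al., 2003; Khan et al., 2006), optical flow (Liu, 2009), patch matching (Gallo et al., 2009; Granados et al., 2013; Hu et al., 2013; Kalantari et al., 2013; Sen et al., 2012), and deep learning (Kalantari and Ramamoorthi, 2017, 2019) have made HDR video possible. However, the post-processing in these methods usually consumes excessive computing resources, which limits their application scenarios.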
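The core of multi-exposure fusion can be sketched in a few lines for the simplest case. The example below assumes a static, already aligned scene and a linear sensor response with pixel values normalized to [0, 1]; the triangle ("hat") weighting is one common choice and is not taken from any specific paper cited above. Motion handling, which the text identifies as the hard part, is deliberately left out.

```python
import numpy as np

def hat_weight(z):
    """Triangle weight on [0, 1]: trust mid-tones, give zero weight to black and saturated pixels."""
    return 1.0 - np.abs(2.0 * z - 1.0)

def merge_exposures(images, exposure_times, eps=1e-8):
    """Weighted average of per-frame radiance estimates (pixel value / exposure time)."""
    num = np.zeros_like(images[0], dtype=np.float64)
    den = np.zeros_like(num)
    for img, t in zip(images, exposure_times):
        w = hat_weight(img)
        num += w * img / t
        den += w
    return num / np.maximum(den, eps)

# Synthetic check: three exposures of a scene spanning two orders of magnitude.
radiance = np.array([0.1, 1.0, 10.0])
times = [1.0, 0.1, 0.01]
images = [np.clip(radiance * t, 0.0, 1.0) for t in times]
hdr = merge_exposures(images, times)
```

Each pixel is recovered from whichever exposures captured it without clipping, which is why bracketing extends dynamic range beyond that of a single frame.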

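1.2.5 HDR imaging based on multiple sensors

Multi-sensor HDR imaging captures images with several detectors at different exposures and gains, aligns them through distortion correction, homography, and similar steps, and then fuses them into an HDR image. As early as 2001, Aggarwal and Ahuja (2001, 2004) achieved HDR imaging with a beam splitter and two sensors exposed by different amounts. Several similar techniques followed (Tocci et al., 2011; McGuire et al., 2007). Kronander et al. (2014) proposed a unified architecture for real-time multi-sensor HDR reconstruction. Yamashita and Fujita (2017) used a complex beam-splitting system for four-sensor HDR fusion. In addition, the Finnish company JAI launched a 2-CCD HDR camera in 2012 with a dynamic range of up to 120 dB, which has since been discontinued. Seshadrinathan and Nestares (2017) proposed a 2×2 array camera for HDR fusion. Similarly, Huynh et al. (2019) added polarizers on this basis and further improved HDR image quality. So far only a few product models using these techniques have reached the market. Given the recent demand for better image quality from multi-camera phones, multi-sensor fusion is a potential route to HDR, but the conflict between the complexity of multi-sensor registration and the compute and power budget of a phone still has to be resolved. The current mainstream options are stereo matching (which needs factory calibration) and pixel alignment by optical flow (which is compute-intensive). With the development of low-latency transmission and cloud computing, or with wider use and higher resolution of depth cameras in phones, multi-sensor fusion is expected to reach the market gradually.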

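1.2.6 HDR image reconstruction

HDR image reconstruction mainly comprises multi-exposure fusion, inverse tone mapping, and reconstruction schemes for other modulation-based HDR imaging.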

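Multi-exposure fusion is the mainstream HDR scheme in consumer and industrial applications today. The scheme of Goshtasby (2005) leaves local color and contrast unchanged but applies only to fixed image sizes. Rovid et al. (2007) proposed gradient-based fusion that reconstructs a fairly high-quality HDR image from poor multi-exposure frames, but only for grayscale images. Varkonyi-Koczy et al. (2008) achieved high-quality color HDR reconstruction, but only for static images. Mertens et al. (2009) fused more images but at low processing speed. Gu et al. (2012) achieved fast and efficient fusion in the gradient field, but only for slowly moving objects. Li et al. (2012) used quadratic optimization to enhance detail on top of HDR imaging but handled overexposed regions poorly. Song et al. (2012) proposed a probability-based fusion scheme that outperformed earlier methods. Shen et al. (2013) proposed fusion based on a perceptual quality measure that performed better experimentally, but its criterion is hard to extend to multiple image sources. Ma et al. (2015) proposed an algorithm for general image fusion. Huang et al. (2018a, 2018b) proposed a new color image fusion method that preserves image detail better than before but still cannot handle dynamic scenes. Kinoshita et al. (2018) used exposure compensation to get statistically closer to natural images, but a suitable exposure value is hard to determine clearly.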

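Inverse tone mapping is a common way to recover HDR images. Larson et al. (1997) proposed the tonal response curve (TRC), which works for most images but only improves visual appearance. Durand and Dorsey (2000) solved some key problems in tone mapping but were slower than the state-of-the-art algorithms of the time. Reinhard et al. (2002) proposed an automatic local dodging-and-burning system that adapts well to most HDR images. Landis (2002) proposed global expansion, which applies an exponential function to the part of an SDR image above a threshold to build an HDR image, but the overall result is unsatisfactory. Banterle et al. (2006) applied the method of Reinhard et al. (2002) to expand SDR images. Akyüz et al. (2007) implemented a simple linear expansion that cannot effectively enhance detail in overexposed regions. Kovaleski and Oliveira (2009) improved detail in overexposed regions at the cost of color distortion, without truly solving the loss of detail in very dark and very bright regions. Kinoshita et al. (2017) achieved a method with high similarity and low computational complexity. In recent years deep learning has brought major progress to inverse tone mapping. A representative method is HDR-CNN by Eilertsen et al. (2017), which partly solves the loss of detail in saturated regions but has limited ability to extend dynamic range. Many algorithms based on convolutional neural networks (CNNs) followed (Zhang et al., 2017; Endo et al., 2017; Moriwaki et al., 2018; Marnerides et al., 2018, 2020; Lee et al., 2018b; Khan et al., 2019; Santos et al., 2020; Choi et al., 2020; Han et al., 2020). More recently, Sharif et al. (2021) proposed a two-stage neural network for inverse mapping, Chen et al. (2020) combined inverse tone mapping with denoising, and Marnerides et al. (2021) proposed an inverse tone mapping method based on generative adversarial networks that fills in information lost in very dark and overexposed regions. Such methods are numerous and have advanced greatly in recent years; a few with low computational complexity are already used in industry.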

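1.2.7 Display processing of HDR images

By adjusting local image contrast, tone mapping maps a high-dynamic-range image onto a display device with a smaller dynamic range. Traditional tone mapping algorithms fall into global, i.e. spatially invariant, algorithms (Schlick, 1995; Tumblin and Turk, 1999; Reinhard et al., 2002), local, i.e. spatially varying, algorithms (Drago et al., 2003; Kim et al., 2011; Durand and Dorsey, 2002), segmentation-based algorithms (Lischinski et al., 2006; Ledda et al., 2004), and gradient-based operators (Tumblin et al., 1999; Banterle et al., 2011; Yee and Pattanaik, 2003; Krawczyk et al., 2005). Traditional algorithms are usually sensitive to their parameters but have a clear advantage where compute is limited. Recent methods tend to use deep neural networks to build parameter-free algorithms that address parameter sensitivity and generalization. Hou et al. (2017) trained a CNN for tone mapping using deep feature consistency and deep image transformation. Gharbi et al. (2017) achieved real-time enhancement with a bilateral grid. In recent years conditional GANs have obtained somewhat better results (Cao et al., 2020; Montulet et al., 2019; Panetta et al., 2021; Patel et al., 2017; Rana et al., 2019; Zhang et al., 2019a). Vinker et al. (2021) achieved HDR tone mapping trained on unpaired data.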
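A global (spatially invariant) tone mapping operator can be written very compactly. The sketch below follows the widely used global form associated with Reinhard et al. (2002): scale luminance by a "key" relative to the log-average, then compress with L(1 + L/L_white²)/(1 + L). The default key of 0.18 and the choice of L_white as the maximum scaled luminance are conventional assumptions for this example, not values taken from the review.

```python
import numpy as np

def reinhard_global(luminance, key=0.18, l_white=None, eps=1e-6):
    """Global tone mapping: scale by key / log-average luminance, then compress to [0, 1]."""
    log_avg = np.exp(np.mean(np.log(luminance + eps)))
    scaled = key * luminance / log_avg
    if l_white is None:
        l_white = scaled.max()  # the brightest input then maps exactly to 1
    return scaled * (1.0 + scaled / l_white**2) / (1.0 + scaled)

# Five luminance samples spanning four orders of magnitude.
lum = np.array([0.01, 0.1, 1.0, 10.0, 100.0])
display = reinhard_global(lum)
```

Because the same curve is applied to every pixel, the operator is cheap and preserves the ordering of luminances, but it cannot adapt local contrast the way the local and learning-based methods above do.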

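1.3 Light-field imaging

The light field (Gershun, 1939) describes the distribution of light rays in 3D free space and is defined by the 7D plenoptic function. Gabor (1948) used the interference of coherent light to obtain the world's first hologram, which contains the direction and position of light and can be seen as a simplified light-field image. Adelson et al. (1992) built a light-field camera model and made an initial depth estimate by analyzing the parallax of the sub-image array. Levoy and Hanrahan (1996) simplified the parametric definition of the light field, and a light-field camera array (Wilburn et al., 2005) was designed to capture and render light fields. Ng et al. (2005) built a hand-held unfocused light-field camera that images first and focuses afterwards, and showed that a light-field camera produces parallax, which opened new possibilities for 3D measurement and 3D reconstruction. Levoy et al. (2006, 2009) developed the light-field microscope and measured the 3D structure of biological specimens. Building on Ng et al. (2005), Lumsdaine and Georgiev (2008, 2009) proposed the focused light-field camera, in which the microlens array sits behind the image-side focal plane of the main lens. Light-field 3D imaging has developed rapidly in recent years and is widely used in engineering measurement, for example particle image velocimetry (Fahringer et al., 2015; Fahringer and Thurow, 2018; Shi et al., 2016a, 2017, 2018b), 3D flame temperature field measurement (Sun et al., 2016), multispectral temperature measurement (Luan et al., 2021), and 3D surface shape measurement (Chen and Pan, 2018; Shi et al., 2018a; Ding et al., 2019). This review briefly introduces light-field-based 3D reconstruction and light-field-based particle image velocimetry.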
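Refocusing after capture, mentioned above for light-field cameras, is often explained with a shift-and-add model: each sub-aperture view is shifted in proportion to its offset from the central view and the views are averaged. The sketch below is a minimal version under simplifying assumptions (integer pixel shifts via `np.roll`, a 4D array layout `(U, V, H, W)`, and a `slope` parameter in pixels per view); these conventions are chosen for illustration and are not taken from the cited papers.

```python
import numpy as np

def refocus(light_field, slope):
    """Shift-and-add refocusing.

    light_field: array of shape (U, V, H, W) holding U*V sub-aperture images.
    slope: shift in pixels per unit view offset; selects the plane brought into focus.
    """
    U, V, H, W = light_field.shape
    uc, vc = (U - 1) / 2.0, (V - 1) / 2.0
    out = np.zeros((H, W))
    for u in range(U):
        for v in range(V):
            dy = int(round(slope * (u - uc)))
            dx = int(round(slope * (v - vc)))
            out += np.roll(light_field[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (U * V)

# Synthetic 3x3 light field of a single point with a disparity of one pixel per view.
lf = np.zeros((3, 3, 17, 17))
for u in range(3):
    for v in range(3):
        lf[u, v, 8 - (u - 1), 8 - (v - 1)] = 1.0
sharp = refocus(lf, slope=1.0)    # shifts cancel the disparity: the point is in focus
blurred = refocus(lf, slope=0.0)  # plain average: the point is spread over 3x3 pixels
```

The slope that makes a point sharpest is its disparity, which is also why focus-based cues can be used for depth estimation.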

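1.3.1 3D reconstruction based on light-field imaging

Many researchers have studied light-field 3D reconstruction (Wu et al., 2017b; Zhu et al., 2017b). Light-field camera calibration, light-field depth estimation, and light-field super-resolution are its key steps.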

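Light-field super-resolution divides roughly into spatial, angular, and joint spatial-angular super-resolution. Methods are based on projection models, prior-based optimization, or deep learning (Cheng et al., 2019a). 1) Projection-model methods exploit the pixel shifts between sub-aperture images from different viewpoints. Lim et al. (2009) used the redundancy in the angular data to achieve spatial super-resolution. Georgiev et al. (2011) matched pixels in the projection model to obtain sub-pixel shifts and doubled the resolution of the light-field sub-images. 2) Prior-based methods make prior model assumptions about factors such as occlusion and specular reflection, build a mathematical or geometric model using the 4D light-field structure, and solve an optimization to obtain the super-resolved light field. Rossi and Frossard (2017) took an approach similar to multi-frame super-resolution, coupling the multi-frame paradigm with a graph regularizer and using the different views to raise the spatial resolution of the whole light field. To handle noise, Alain and Smolic (2018) combined a single-image super-resolution filter based on BM3D (block-matching and 3D filtering) sparse coding (Egiazarian and Katkovnik, 2015) with the LFBM5D filter for light-field denoising (Alain and Smolic, 2017) and achieved good spatial super-resolution. 3) Deep-learning methods train a neural network end to end on light-field data. Yoon et al. (2015) upsampled angular and spatial resolution together with deep learning, synthesized novel views between adjacent views, and enhanced detail in the sub-aperture images.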

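As light-field theory has developed, methods for computing scene depth from light-field images have kept appearing. The synthetic light-field dataset website 4D Light Benchmark (Vaish et al., 2004; Johannsen et al., 2017) further accelerated the development of light-field depth algorithms. By how they obtain disparity, methods generally divide into those based on matching points between sub-images, those based on the EPI (epipolar image), focus/defocus methods, and deep-learning methods. The most representative is depth estimation based on epipolar line images (EPI). Johannsen et al. (2017) first proposed computing depth for a light-field camera from epipolar line images. Wanner and Goldluecke (2014) computed the slope of the epipolar line images with the structure tensor and refined the disparity map with a fast filtering algorithm. Another mainstream method is the depth estimation algorithm based on refocusing and correspondence proposed by Tao et al. (2013), which was developed further by adding shading and occlusion information (Wang et al., 2016a); it achieves high accuracy and is optimized for noise, non-Lambertian surfaces, and occlusion. Williem and Park (2016) proposed an algorithm based on angular entropy and adaptive refocusing that improved depth estimation. Jeon et al. (2015) at the Korea Advanced Institute of Science and Technology (KAIST) proposed a multi-view depth algorithm based on phase shift that uses all views of the light field to compute depth, and later obtained more accurate disparity maps with machine-learning-based optimization (Jeon et al., 2019a). Neri et al. (2015) computed disparity with a local gradient operator based on multi-scale adaptive windows and proposed the RM3DE (multi-resolution depth field estimation) algorithm, which speeds up depth estimation. Strecke et al. (2017) proposed the OFSY_330/DNR (occlusion-aware focal stack symmetry) algorithm, which computes depth from multi-view images in four directions and considers only horizontal and vertical views in occluded regions, which helps when optimizing surface normals. With the rapid development of deep learning, Johannsen et al. (2016) were the first to use deep learning and obtained good results on multi-scale scenes. Heber and Pock (2016) estimated light-field depth with an end-to-end deep learning architecture. Heber et al. (2017) then proposed a depth estimation algorithm that fuses a convolutional neural network (CNN) with variational optimization to compute the slope of epipolar line images. Shin et al. (2018) at KAIST designed the fully convolutional network EPINET (epipolar geometry of light-field images network) based on CNNs, which performs well on real scenes but generalizes poorly to some scenes. Alperovich et al. (2018) used an encoder-decoder structure on multi-view light-field images to obtain the disparity map and the illumination information of the light field at the same time.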

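Light-field camera calibration converts the disparity map from depth estimation into dimensions in physical space and is a key step in light-field 3D measurement. Because ray paths inside a light-field camera are complex, researchers in China and abroad have proposed various calibration methods. Dansereau et al. (2013) proposed a projection model with 15 parameters (3 of them redundant) and, taking optical distortion into account, derived a 4D intrinsic matrix that relates each recorded pixel position to a ray in 3D space. Bok et al. (2017) used a novel line feature to improve Dansereau's 15-parameter model and proposed a projection model with only 12 parameters, using a geometric projection model to calibrate unfocused light-field cameras. Nousias et al. (2017) proposed a geometric calibration method for focused light-field cameras based on checkerboard corners. O'Brien et al. (2018) proposed a calibration method based on plenoptic disc features that works for both focused and unfocused light-field cameras. Hall et al. (2018) proposed a calibration method based on a polynomial mapping function that needs no specific lens parameters. It accounts for lens distortion to improve accuracy, and experiments with non-planar calibration show the flexibility of the method.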

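1.3.2 Particle image velocimetry based on light-field imaging

Applying light-field imaging to flow measurement (light-field particle image velocimetry, LF-PIV) yields 3D velocity fields from a single camera and is an important advance for 3D diagnostics in confined spaces. The main work comes from Brian Thurow's group at Auburn University in the United States and Shi Shengxian's group at Shanghai Jiao Tong University in China. Thurow's group designed and packaged a light-field camera based on a square microlens array (Fahringer et al., 2015). One of the key problems in LF-PIV is how to reconstruct, from a single light-field frame, the 3D image of the flow tracer particles (hundreds of nanometers to tens of micrometers in size). Fahringer and Thurow (2016) proposed light-field particle image reconstruction algorithms based on MART and refocusing.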

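Thomason et al. (2014) addressed the reconstruction error caused by distortion of the optical path and studied how refraction in the propagation medium affects the reconstructed particle positions. They divided the refractive media in the optical path into external media (between the lens and the tracer particles, such as the wall of a water tunnel) and internal media (between the main lens and the CCD array). To reduce reconstruction error, Fahringer and Thurow (2016) proposed a particle reconstruction algorithm with threshold post-processing, which gives a higher signal-to-noise ratio in the reconstructed particle field than direct refocusing. Researchers abroad have applied LF-PIV to experiments on several complex flows, including blood flow in cerebral aneurysms (Carlsohn et al., 2016), flat-nozzle jets, and flow around a cylinder (Seredkin et al., 2016, 2019).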

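1.4 Spectral imaging

Spectral imaging is usually divided into scanning and snapshot types. Snapshot systems capture the whole data cube in a single exposure and therefore have more potential in applications, so current research in computer vision and graphics concentrates on snapshot spectral imaging. By implementation, snapshot spectral imaging divides into aperture-division, field-division, and aperture/field-coded spectral imaging.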

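1.4.1 Aperture-division spectral imaging

Aperture-division spectral imaging grew out of TOMBO (thin observation module by bound optics), which is based on the compound-eye principle (Tanida et al., 2001). An array of filters with different narrow passbands is placed on a microlens array so that each microlens captures one spectral band image independently, which is similar to using many separate imaging lens groups for optical duplication (Shogenji et al., 2004). Microlens array groups are hard to fabricate, so the earliest work could use only single lenses, which gave poor image quality. To solve this, researchers developed an aperture-division system based on metasurface arrays (McClung et al., 2020). Each aperture channel consists of a metasurface-based tunable filter and a metasurface doublet. Because metasurfaces are planar, they are easy to fabricate and integrate by lithography. Using one metasurface lens as the field stop avoids crosstalk between lens groups and also corrects off-axis aberrations, such as field curvature, that single microlenses suffer from. Whether microlens or metalens arrays are used, parallax is unavoidable because the lens centers are at different positions. To solve this problem, IMC proposed a scheme with an external main objective and a field stop (Geelen et al., 2013): the main objective images the distant target onto the field stop, and the image on the field stop is relayed by the microlens array onto a detector integrated with a multilayer-film filter array. This approach demonstrated acquisition at 30 frames/s in daylight, and up to 340 frames/s at higher light levels such as in machine vision. All the multi-aperture systems above need filters arranged in an array, and the commonly used multilayer-film filter arrays are hard to make. Hubold et al. (2018) proposed multi-aperture spectral imaging with a graded filter, in which the graded filter sits above the microlens array and is tilted relative to it by an angle θ. The number of spectral channels equals the number of microlenses, and the system is small, light, and cheap. However, it assumes that the spectrum is approximately constant over a short distance. When a single microlens covers a large region of the graded filter, the spectra overlap noticeably and spectral resolution drops. The demonstration produced a 3D spectral data cube of 400×400×66 over 450 to 850 nm.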

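1.4.2 Field-division spectral imaging

Field-division spectral imaging comes in many forms, including systems based on slicing mirrors (Content et al., 2013), deformable fiber bundles (Gat et al., 2006), microlens arrays (Dwight and Tkaczyk, 2017), and pinhole arrays (Bodkin et al., 2009), image mapping spectrometry (Gao et al., 2010), and pixel-level filter arrays (Geelen et al., 2014). Pixel-level filter imaging integrates the filter array directly on the detector. It is small and highly integrated and is the spectral imaging technique most likely to reach terminals such as phones. The earliest pixel-level filter array is the color CMOS detector in everyday use. Geelen et al. (2014) generalized it and made a 4×4, 16-channel pixel-level spectral filter. Pixel-level filters have three drawbacks. They are mostly built from multilayer films, which is very expensive. They trade spatial resolution for spectral resolution, so the more spectral channels there are, the lower the spatial resolution. And the more spectral channels there are, the less energy each channel receives and the lower the signal-to-noise ratio. To reduce fabrication difficulty, researchers proposed pixel-level filters based on Fabry-Perot (FP) cavities (Huang et al., 2017; Williams et al., 2019). These exploit the narrowband transmission of the FP cavity and its simple "high-reflection layer, transmissive spacer, high-reflection layer" structure, and use grayscale lithography to make the filter array for all bands in a single step, which lowers fabrication difficulty considerably. To counter the drop in per-channel energy and signal-to-noise ratio as channels increase, researchers proposed spectral imaging with broadband pixel-level filters. For example, Bao and Bawendi (2015) used the tunable absorption bands of quantum dots for broadband pixel-level spectral imaging. Metasurfaces and silver nanowires, new materials that control light waves within a nearly planar space, are also well suited to broadband filters: Craig et al. (2018) and Meng et al. (2019) showed chip-scale spectral imaging for the infrared, Wang et al. (2019c) showed broadband spectral imaging based on photonic crystals, and Yang et al. (2019) and Cadusch et al. (2019) showed broadband spectral imaging based on silver nanowires. To relax the trade-off between spectral and spatial resolution in pixel filter arrays, researchers developed spectral reconstruction based on compressed sensing (Nie et al., 2018; Kaya et al., 2019). By searching for the best spectral response curve for each pixel (Fu et al., 2022; Stiebel and Merhof, 2020; Sun et al., 2021a), these methods reach hyperspectral capability with only a small number of spectral pixels and break the coupling between spectral and spatial resolution.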

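1.4.3 Aperture/field-coded spectral imaging

Aperture/field-coded spectral imaging reconstructs spectral images using compressed sensing theory. It exploits the sparsity of the spatial-spectral data cube, in more dimensions and more completely than compressed sensing with pixel-level filter arrays does, and research focuses on coding schemes and reconstruction algorithms. The most typical system is CASSI (coded aperture snapshot spectral imaging) (Brady and Gehm, 2006; Wagadarikar et al., 2008). Its first main problem is that it relies on a sparsity assumption about natural scenes, so reconstruction errors are unavoidable, and the reconstruction algorithm is too complex to recover hyperspectral data in real time. Wang et al. (2017, 2019b) proposed a dual-camera system based on complementary observations, with a hyperspectral reconstruction algorithm that combines data with prior knowledge. The second main problem of CASSI is that it is too bulky to integrate. Baek et al. (2017) shrank the system by adding a dispersive prism directly onto a standard DSLR lens, but spectral information can be recovered well only at edges, which makes the reconstruction highly ill-posed and the spectral accuracy poor. Golub et al. (2016) replaced the prism with a single diffuser as the dispersive element, which reduces the size further, and combined it with a color CMOS detector to improve spectral reconstruction accuracy. To shrink the system even further, Jeon et al. (2019b) proposed using a single diffractive optical element as both the imaging lens and the dispersive element: the element is designed to produce a wavelength-dependent PSF for joint aperture/field coding, and a model-based deep learning algorithm then reconstructs the hyperspectral image. In a similar spirit, a single strongly dispersive diffuser can also perform spectral imaging (Sahoo et al., 2017; French et al., 2017). Such systems in effect use speckle to encode the spatial-spectral data cube, but their spectral resolution depends heavily on the correlation between speckle patterns at different wavelengths and is usually low. To address this, Monakhova et al. (2020) proposed combining a diffuser with a pixel-level filter array, which keeps the thinness of a single diffuser while the filter array ensures high spectral resolution. In particular, by decoding the spatial information encoded in the speckle, the method avoids the loss of spatial resolution that pixel-level filter arrays otherwise incur.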
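The measurement process of a coded-aperture snapshot system such as CASSI is commonly modeled as "mask, shear, integrate": the coded aperture multiplies every spectral band, the disperser shifts each band by a wavelength-dependent amount, and the detector sums over wavelength. The sketch below implements this idealized forward model; the one-pixel-per-band shift, the binary random mask, and the array sizes are simplifying assumptions for illustration, and reconstruction (the hard inverse problem discussed above) is not attempted.

```python
import numpy as np

def cassi_forward(cube, mask):
    """Idealized single-disperser snapshot measurement.

    cube: (H, W, L) spectral data cube; mask: (H, W) coded aperture.
    Returns an (H, W + L - 1) image: each band is masked, shifted by one
    pixel per band index along the width axis, and summed on the detector.
    """
    H, W, L = cube.shape
    y = np.zeros((H, W + L - 1))
    for k in range(L):
        y[:, k:k + W] += mask * cube[:, :, k]
    return y

# Small random example: 8x8 pixels, 4 spectral bands, 50% open binary mask.
rng = np.random.default_rng(0)
cube = rng.random((8, 8, 4))
mask = (rng.random((8, 8)) > 0.5).astype(float)
snapshot = cassi_forward(cube, mask)
```

The snapshot has far fewer samples (H × (W + L − 1)) than the cube (H × W × L), which is why recovery has to lean on sparsity or learned priors.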

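1.5 Lensless imaging

With rapid progress in micro/nano optics and computational imaging, planar optical elements, with their compact structure, are becoming an alternative to traditional refractive and reflective lenses (such as photographic objectives, telescopes, and microscopes) (Capasso, 2018). Typical planar optical elements are diffractive optical elements (DOEs) and metalenses (Yu and Capasso, 2014; Banerji et al., 2019). Although these elements compress the conventional lens into wavelength-scale or sub-wavelength structures, they still image by point-to-point mapping, and the minimum system size is still limited by focal length, aperture, and field of view. Lensless imaging, which has emerged in recent years, offers a new way to shrink the imaging system further (Boominathan et al., 2016).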

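Lensless imaging abandons the point-to-point mapping of a conventional lens. It projects each point in object space to a particular point spread function in image space, and different object points superpose on the image plane to form raw data that the human eye cannot interpret but an algorithm can restore. The optical hardware encodes the image information and the algorithm decodes it, so optics and algorithm are designed jointly.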

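Lensless imaging originated in research on bionic compound eyes (Cheng et al., 2019b; Lee et al., 2018a). In nature, vertebrate vision (such as the human eye) usually uses lens-based point-to-point imaging, whereas many other organisms (such as insects) sense their environment through compound eyes (Schoenemann et al., 2017). A compound eye contains tens or even hundreds of ommatidia, each consisting of a cornea, crystalline cone, pigment cells, retinula cells, rhabdom, and other structures. The light collected by the compound eye is passed through the optic nerve to the brain for processing to obtain information about the environment. Inspired by this, scientists have built many lensless imaging devices that imitate the compound eye. However, lensless imaging that directly imitates the insect compound eye is still structurally complex and low in resolution, and cannot meet the growing demand for compact, high-quality imaging.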

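In recent years, as computational imaging has advanced, image restoration algorithms have played a growing role in imaging systems. Lensless systems are now designed with a computational mindset rather than as simple imitations of natural compound eyes. In particular, the PSF of the lensless optics should be co-designed with the reconstruction algorithm so that the image information encoded by the optics can be decoded effectively. Following this idea, many amplitude-type and phase-type planar lensless systems have emerged, greatly improving on the resolution of compound-eye lensless imaging while remaining extremely compact.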

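A lensless system involves two parts: design of the planar optical element and the image reconstruction algorithm. The optical element can be amplitude-type or phase-type. Design is usually heuristic: a PSF that favors algorithmic reconstruction is chosen, and the corresponding amplitude or phase element is then designed backwards from the laws of diffraction. Typical designs include FlatCam (Asif et al., 2017), DiffuserCam (Antipa et al., 2018), PhlatCam (Boominathan et al., 2020), and Voronoi-Fresnel (Fu et al., 2021). Reconstruction generally uses regularized inverse-problem solvers (for example with a total variation (TV) regularizer) or, for large blur kernels, deep-learning-based reconstruction.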

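Amplitude-type lensless imaging modulates the light wave with a binary black-and-white pattern. Early patterns were simple randomly distributed pinhole arrays or uniformly redundant arrays (URAs), which rely on the shadow the pattern casts as light propagates to create a patterned PSF on the image sensor. Such simple PSFs are usually numerically poorly conditioned and do not readily give high-quality reconstructions. DeWeert and Farm (2015) proposed a separable doubly-Toeplitz mask whose PSF makes the inverse problem less ill-conditioned and so gives better image quality. Asif et al. (2017) studied different mask designs and their numerical stability in depth and proposed FlatCam, a separable 2D mask formed as the outer product of two 1D M-sequences. Besides better numerical stability, this mask is easy to fabricate and calibrate, which makes it a good design for amplitude-type lensless systems. Tajima et al. (2017) proposed using a binary Fresnel zone plate as the mask, which produces ring patterns through the moiré effect; exploiting its depth-independent property, they obtained a lensless light-field imaging system capable of refocusing. Amplitude-type lensless imaging has an inherent drawback, however: it passes at most 50% of the light, so part of the intensity is lost and energy efficiency is low.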
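A separable mask makes the lensless measurement model small enough to invert in closed form, which is part of why it is numerically attractive. Assuming the measurement can be written as Y = Φ_L X Φ_Rᵀ, the sketch below implements this forward model and a Tikhonov-regularized inverse computed from the SVDs of the two small matrices. The random ±1 matrices stand in for calibrated transfer matrices, and the regularization weight `tau` is a hypothetical choice for the example.

```python
import numpy as np

def separable_forward(x, phi_l, phi_r):
    """Separable lensless measurement: Y = Phi_L @ X @ Phi_R^T."""
    return phi_l @ x @ phi_r.T

def separable_tikhonov(y, phi_l, phi_r, tau=1e-3):
    """Closed-form minimizer of ||Phi_L X Phi_R^T - Y||^2 + tau * ||X||^2 via two small SVDs."""
    ul, sl, vlt = np.linalg.svd(phi_l, full_matrices=False)
    ur, sr, vrt = np.linalg.svd(phi_r, full_matrices=False)
    y_t = ul.T @ y @ ur                 # measurement expressed in the singular bases
    s = np.outer(sl, sr)                # products of left and right singular values
    x_t = s * y_t / (s ** 2 + tau)      # element-wise regularized inverse
    return vlt.T @ x_t @ vrt

# Noise-free check with stand-in transfer matrices (32 sensor rows/cols, 16x16 scene).
rng = np.random.default_rng(0)
phi_l = rng.choice([-1.0, 1.0], size=(32, 16))
phi_r = rng.choice([-1.0, 1.0], size=(32, 16))
scene = rng.random((16, 16))
measurement = separable_forward(scene, phi_l, phi_r)
estimate = separable_tikhonov(measurement, phi_l, phi_r, tau=1e-9)
```

With noisy data a larger `tau` damps the components associated with small singular values, trading resolution for stability.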

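Phase-type lensless imaging uses all the incident light. It modulates the phase of the incident wave to produce an intensity pattern on the detector plane. Gill (2013) proposed an odd-symmetric binary-phase (0 and 1) diffraction grating whose Fresnel diffraction pattern yields a spiral PSF. Tiling this unit binary grating periodically over the 2D plane forms an array of spiral spots. The design, called PicoCam, enables high-throughput lensless imaging. Because the diffraction efficiency of binary-phase gratings is still limited, Antipa et al. (2018) proposed DiffuserCam, which uses a random diffuser to generate a caustic pattern as the PSF. It produces a high-contrast PSF whose shape scales with depth, giving a depth-dependent PSF, and DiffuserCam uses this property for single-shot 3D lensless imaging. The diffuser is typically taken from items such as transparent tape, so it is a sub-optimal optical element. To make the PSF of such phase elements more favorable to algorithmic reconstruction, Boominathan et al. (2020) summarized several beneficial PSF properties: sparse pattern, high contrast, and diverse directional filtering. Based on these, the authors used a pattern generated by binarizing Perlin noise as the target PSF and designed a continuous phase function that produces it by iterating forward and backward Fresnel propagation. This system, called PhlatCam, has a PSF that resembles contour lines and has all the properties the authors list. However, PSFs of this kind are all heuristic; their optimality comes from experimental experience rather than from an objective metric. Fu et al. (2021) proposed a Fourier-domain metric based on the modulation transfer function, the modulation transfer function volume (MTFv), which objectively measures how much information the optical system captures: the larger the MTFv, the more information the system encodes. Using this principle, the authors designed a lensless phase function that resembles an insect compound eye, called the Voronoi-Fresnel phase. It uses the Fresnel phase of an ideal lens as the basic unit and packs the units tightly in 2D following a Voronoi diagram. The resulting PSF is both sparse and high in contrast, the polygonal aperture of each Voronoi cell acts as a directional filter in the Fourier domain, and the spatial distribution is more compact. The method also uses MTFv as the objective metric to optimize the distribution of the basic units, and the resulting phase function has better frequency-domain behavior and optical performance.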

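The raw data of a lensless system are not a viewable image and must be restored with a reconstruction algorithm. Common algorithms divide into traditional regularized deconvolution and data-driven deep learning. In the early reconstructions for DiffuserCam, PhlatCam, and Voronoi-Fresnel, the authors used TV-regularized deconvolution within the ADMM (alternating direction method of multipliers) optimization framework for efficient reconstruction. This approach is usually affected by an inexact imaging model, low signal-to-noise ratio, and stray light, which limit image quality. Monakhova et al. (2019) used DiffuserCam as the prototype, collected a large lensless imaging dataset, and proposed three deep learning frameworks with an unrolled ADMM structure, Le-ADMM, Le-ADMM*, and Le-ADMM-U, to restore DiffuserCam data, with good results. Khan et al. (2022) used FlatCam and PhlatCam as prototypes and proposed FlatNet, which reconstructs the image in two stages: the raw image first passes through a trainable first stage to give an initial reconstruction, and a second network then performs perceptual enhancement so that the result has better perceptual quality. To address the difficulty of collecting high-quality lensless datasets at scale, Monakhova et al. (2021) built on deep image prior and proposed a method that restores high-quality images without large-scale training. It uses an image generation network to find an abstract prior in the lensless image, builds a high-dimensional prior network for the original image, generates the raw data expected on the detector with the imaging model, compares them with the raw data actually captured, and solves an optimization over the network parameters, which gives training-free reconstruction. To ease the difficulty of calibrating the PSF accurately, Rego et al. (2021) took DiffuserCam as an example and used a generative adversarial network (GAN) structure to represent the original image and the PSF jointly, then compared the generated raw image with the captured one on the basis of the imaging model, and recovered a sharp high-quality image through extensive training.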

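1.6 Low-light imaging

Low-light imaging has made considerable progress in recent years. By input, methods divide into single-frame input, multi-frame input (burst imaging), flash-assisted capture, and sensor technology. 1) Classic single-frame methods include various filters (Gaussian, median, and bilateral filters), non-local means (NLM), block-matching and 3D filtering (BM3D), and denoising based on dictionary learning or wavelets. Plötz and Roth (2017) evaluated methods on real captured data and found that although many methods beat BM3D on synthetic data with Gaussian noise, BM3D still performed best on real data. Lefkimmiatis (2018) implemented each step of BM3D with neural networks. Abdelhamed et al. (2018) provided a real dataset captured with phone cameras. Studies show that denoising in the RAW domain (Chen et al., 2018a, 2019; Jiang and Zheng, 2019) works better than denoising RGB images that have gone through demosaicing and other processing, because post-processing makes the noise distribution more complex and harder to remove. When only post-processed images are available, Brooks et al. (2019) inverted the processing back to the RAW domain for denoising and obtained better results. 2) Multi-frame input is widely used on phones such as Google Pixel and iPhone. Hasinoff et al. (2016) and Liba et al. (2019) describe Google's full pipeline in detail. Mildenhall et al. (2018) proposed kernel prediction networks for multi-frame denoising, and Xia et al. (2020) exploited redundancy among the kernels to optimize them, improving reconstruction quality and speed. 3) A flash raises scene illumination and so improves photo quality. The simplest approach is a white flash. Because the spectrum of a white flash differs from that of the ambient light, the foreground and background take on different color casts, so the iPhone introduced the "True-tone" flash, whose spectrum is close to that of the ambient light. Flash brings its own problems, such as unnatural lighting, glare, and limited range, which restrict its use. Researchers have proposed remedies, such as fusing a flash/no-flash pair (Petschnigg et al., 2004; Eisemann and Durand, 2004; Xia et al., 2021), using invisible light such as infrared or ultraviolet (Krishnan and Fergus, 2009), and using a deep-red flash to which the eye responds weakly (Xiong et al., 2021). Limited range remains unsolved because of a physical constraint: light intensity falls off with the square of the distance. 4) Sensor technology can be improved or new sensors used, for example back-illuminated sensors, microlenses that raise the fill factor and collect more light, and SPAD sensors (Ma et al., 2020).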

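1.7 Active 3D imaging

Common methods for active 3D imaging are structured light and time of flight (including iTOF and dTOF). Their principles differ: structured light obtains distance by triangulation, and TOF measures the flight time of light. The challenges they face are similar. 1) They are susceptible to ambient light; for example, the photon noise produced by sunlight (as opposed to its bias) can swamp the signal. 2) Accuracy suffers greatly when indirect light is present. Both structured light and TOF assume that emitted light is reflected once by the object back to the receiver; such light is called direct light. If the object has concave surfaces or is translucent, or if the medium contains many small particles that scatter light, the resulting light is collectively called indirect light, and 3D imaging then shows large errors. 3) Crosstalk between multiple devices. These problems have seen great progress in recent years. The following focuses on solutions to ambient light and indirect light; some apply to both structured light and TOF.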
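The distance relations behind the three active methods named above are short enough to state as code. The functions below use the standard textbook forms: triangulation depth from focal length, baseline, and disparity for structured light; half the round-trip distance for dTOF; and phase-to-distance conversion for continuous-wave iTOF together with its unambiguous range. The function names and example values are illustrative only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def triangulation_depth(focal_px, baseline_m, disparity_px):
    """Structured light / stereo: z = f * b / d for a rectified projector-camera pair."""
    return focal_px * baseline_m / disparity_px

def dtof_depth(round_trip_s):
    """Direct TOF: light travels to the object and back, so depth is half the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def itof_depth(phase_rad, mod_freq_hz):
    """Indirect (continuous-wave) TOF: depth from the phase shift of the modulation signal."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)

def itof_unambiguous_range(mod_freq_hz):
    """Largest depth before the measured phase wraps around 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)

# Example: a 20 MHz modulation frequency wraps at roughly 7.5 m.
print(f"{itof_unambiguous_range(20e6):.2f} m")
```

These formulas assume only direct light; ambient and indirect light corrupt the disparity, timing, or phase that they take as input, which is the subject of the following paragraphs.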

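For ambient light (mainly sunlight), there are several classes of methods, which can be combined. 1) Use a narrowband optical filter (Padilla et al., 2005). Sunlight is broadband whereas the emitted light can be narrowband: for example, use an 850 nm laser with an 850±5 nm filter at the receiver. The passband should not be made as narrow as possible, because margin is needed: the laser wavelength drifts with temperature, and the wavelength passed by the filter on the camera side depends on the angle of incidence. 2) Use a polarizer (Padilla et al., 2005), because sunlight has no dominant polarization direction. 3) Use wavelengths where sunlight is weak, such as 938 nm in the near infrared, or the short-wave infrared (900 nm to 2.5 μm). Sunlight contains little light in some short-wave infrared bands, and short-wave infrared is safer for the eye, so the power can be raised; this makes short-wave infrared very attractive. It cannot use silicon detectors, though, and needs InGaAs detectors. InGaAs area arrays currently have low resolution and high cost, whereas InGaAs line arrays have higher resolution and lower cost. Wang et al. (2016b) proposed DualSL (dual structured light), a structured-light setup based on a line sensor that obtains a 2D depth map from a 1D sensor. 4) Raise the emitter power, which increases the emitter's size, weight, and heat dissipation burden and is limited by eye safety. 5) Concentrate and scan. Microsoft Kinect and Intel RealSense spread the light to illuminate the whole scene, so the energy at each point is weak. Concentrate-and-scan schemes focus the light into a point or a line and then scan. Traditional point scanning concentrates light the most but is the slowest, with very low temporal resolution. Line scanning is somewhat better, but temporal resolution is still unsatisfactory. Matsuda et al. (2015) proposed pairing point scanning with an event camera to reach real time. Mertz et al. (2012) proposed a small portable laser projector paired with a short-exposure camera for line scanning in sunlight, but at a low frame rate. EpiScan3D (O'Toole et al., 2015) aligns a laser scanning projector and a rolling-shutter camera along epipolar lines so that the epipolar line of each projector row coincides with a camera row; it uses a projector of ordinary power, can build a 3D model of a lit light bulb, and runs in real time. EpiTOF is the TOF version of this scheme (Achar et al., 2017). Gupta et al. (2013) proposed a scanning scheme that can be set continuously between line scanning and area scanning according to the ambient light level. Wang et al. (2018) proposed pairing a line-scanning laser with a line-scanning camera and controlling their relative scan speeds to sweep a surface in space, called a light curtain, which achieved real-time obstacle avoidance in sunlight. Bartels et al. (2019) paired a line-scanning laser with a rolling-shutter camera to increase light throughput and scan speed. 6) SPAD-based TOF. In sunlight a SPAD is disturbed by many ambient photons, which makes the timing of the signal photons hard to measure; this is known as photon pileup distortion. It can be reduced to some extent by choosing an optimal neutral density filter (Gupta et al., 2019a) or by adding random delays between the SPAD exposure window and the emission time (Gupta et al., 2019b).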

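For indirect light, many methods are inspired by Nayar et al. (2006), who project spatially high-frequency patterns to separate direct from indirect light. One option is to separate the two before computing depth and then compute depth from the direct component. Another is to use high-frequency patterns under which the indirect component averages out automatically and so does not affect the depth computation. Other approaches classify camera pixels and then iterate adaptively (Xu and Aliaga, 2007, 2009), or use multiplexed illumination to reduce the number of patterns (Gu et al., 2011). Methods that use high-frequency patterns to average out or cancel the indirect light include modulated phase shifting (Chen et al., 2008), which uses a high-frequency pattern as the carrier; a single high-frequency pattern (Couture et al., 2011); modified conventional Gray codes (Gupta et al., 2011); micro phase shifting (Gupta and Nayar, 2012), which uses several neighboring high-frequency patterns; and embedded phase shifting, which embeds a low frequency in high-frequency patterns (Moreno et al., 2015). Other methods block indirect light optically, such as O'Toole et al. (2014), who exploit the optical epipolar constraint between projector and camera, and Episcan3D (O'Toole et al., 2015), which improves light throughput. All of these are relevant to iTOF: Naik et al. (2015) used the result of direct-global separation to guide multipath interference removal in iTOF, Gupta et al. (2015) used high frequencies so that the multipath contributions cancel one another, and EpiTOF is the TOF version of Episcan3D. For translucent objects, Chen et al. (2007b) proposed structured light with polarized light: direct light tends to keep its polarization, whereas scattered light tends to lose it. dTOF is not troubled by the indirect-light problem, but scattering by the medium, for example in smoke or fog, challenges structured light, iTOF, and dTOF alike. Methods that exploit the scattering properties of the medium divide into: 1) comparison with neighbors, along a curve in space for structured light (Narasimhan et al., 2005) and along a curve in time for TOF (Satat et al., 2018); 2) removing stray light in the optical path, as with light curtains (Wang et al., 2018); 3) using longer wavelengths, such as short-wave infrared (for example DualSL (Wang et al., 2016b)) and long-wave infrared (Erdozain et al., 2020). Finally, using deep learning to inspect depth maps that contain errors and correct them has also worked well (Marco et al., 2017).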
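The separation of direct and indirect (global) light with high-frequency patterns can be illustrated with its simplest form. Suppose shifted binary patterns light about half of the projector pixels, every scene point is fully lit in at least one frame and unlit in at least one other, and the projector's dark pixels emit no light. Then the per-pixel maximum is L_direct + L_global/2 and the minimum is L_global/2. The sketch below applies these two relations; the idealizations are assumptions for this example, and real captures require more patterns and careful handling of pattern edges.

```python
import numpy as np

def separate_direct_global(frames):
    """Per-pixel separation from a stack of frames under shifted high-frequency patterns.

    frames: array of shape (K, H, W). Assumes ~50% of projector pixels are lit,
    each pixel is fully lit in some frame and fully unlit in another.
    """
    l_max = frames.max(axis=0)   # direct + global / 2
    l_min = frames.min(axis=0)   # global / 2
    direct = l_max - l_min
    global_ = 2.0 * l_min
    return direct, global_

# Synthetic check: build the lit and unlit observations from known components.
rng = np.random.default_rng(0)
true_direct = rng.random((4, 4))
true_global = rng.random((4, 4))
frames = np.stack([true_direct + true_global / 2, true_global / 2, true_global / 2])
direct, global_ = separate_direct_global(frames)
```

Depth computed from the `direct` image alone is then free of inter-reflection and scattering under these idealized conditions.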

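1.8 Computational photography

1.8.1 Automatic white balance

White balance is an important stage of the image signal processor (ISP). When viewing a scene, the human eye automatically corrects the color cast that the illumination color imposes on objects. In digital imaging, to reproduce the colors as the eye would see them, the camera likewise has to estimate the illumination color from the image and correct for it. This process is called automatic white balance.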

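Automatic white balance methods fall into two main classes. Traditional algorithms usually take a single image as input, estimate the scene illumination by analyzing the color distribution of that image, and correct the colors accordingly. Buchsbaum (1980) proposed the "grey world" model, which assumes that the average color of objects in the image is gray and calibrates the image colors on that basis. Finlayson and Trezzi (2004) extended the grey-world model by computing the average color with the Minkowski norm and determined experimentally which norm order works best.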

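Machine-learning-based automatic white balance, on the other hand, usually needs a fairly large dataset. A model is trained to learn the regularities in a large number of color-calibrated images so that it can white-balance newly captured images better. Brainard and Freeman (1997) and Gehler et al. (2008) used Bayesian theory to model prior knowledge such as object materials and illumination colors. Finlayson et al. (2006) recast white balance as a gamut mapping problem. Barron et al. (Barron, 2015; Barron and Tsai, 2017) mapped pixel colors into a log color space and used pattern recognition methods for white balance. Shi et al. (2016c) proposed a new deep neural network architecture to discriminate and select among candidate illumination colors in the image, and Hu et al. (2017) used a deep neural network to identify the parts of the image from which the true color is easy to infer and computed the illumination color from them.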
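The classical single-image approach described earlier in this subsection (the grey-world assumption and its Minkowski-norm generalization) fits in a few lines. In the sketch below the norm order `p` is left as a free parameter: `p = 1` gives the plain grey-world average, and larger values weight bright pixels more. The function names and the gain normalization are choices made for this example, and the image is assumed to be linear RGB of shape (H, W, 3).

```python
import numpy as np

def estimate_illuminant(image, p=1.0):
    """Illuminant direction from the per-channel Minkowski p-norm mean (p=1 is grey world)."""
    flat = image.reshape(-1, 3).astype(np.float64)
    est = np.mean(flat ** p, axis=0) ** (1.0 / p)
    return est / np.linalg.norm(est)

def white_balance(image, illuminant):
    """Per-channel gains that map the estimated illuminant to neutral gray."""
    gains = illuminant.mean() / illuminant
    return image * gains

# Synthetic gray scene lit by a warm light source.
rng = np.random.default_rng(0)
reflectance = rng.random((10, 10, 1)) + 0.1          # achromatic surfaces
light = np.array([1.0, 0.8, 0.6])
observed = reflectance * light
balanced = white_balance(observed, estimate_illuminant(observed))
```

The estimate is exact here only because the synthetic scene really is gray on average; scenes dominated by one color violate the assumption, which is the motivation for the learning-based methods above.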

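1.8.2 Background blur

Because imaging devices on mobile platforms are constrained in size and thickness, photos taken with phone lenses generally have a very large depth of field, so the blurred backgrounds of professional cameras are hard to achieve. In recent years many papers have used post-processing to simulate a shallow depth of field and a blurred background. Shen et al. (2016a, b) segmented portrait images into foreground and background and blurred the background uniformly. Zhu et al. (2017a) further proposed an algorithm that speeds up portrait foreground-background segmentation. Uniform background blur is not realistic, however, because in reality more distant objects are more blurred.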

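For more realistic depth-of-field simulation, researchers have tried to predict object depth from a single image (Eigen et al., 2014; Liu et al., 2016; Zhou et al., 2017; Luo et al., 2020). Depth predicted from a single image generally has large errors, however. The Google Pixel team (Wadhwa et al., 2018) proposed using the "dual pixels" of the phone camera for better and faster depth prediction and hence more realistic background blur. Ignatov et al. (2020) collected a large set of photos taken with a DSLR at different depths of field and proposed blurring the background directly with a neural network in an end-to-end manner, skipping the depth prediction step.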

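1.8.3 Burst photography

Limited by the sensor size of mobile cameras, photos taken directly with a phone usually contain considerable noise. Lengthening the exposure time reduces the noise but introduces motion blur. Burst photography has therefore gradually become the mainstream of computational photography. It was first proposed by the Google Pixel team (Hasinoff et al., 2016), and the pipeline is roughly as follows: first, capture several frames in succession with short exposure times, so that each frame is sharp and not overexposed; next, align the pixels of the burst frames with an optical flow algorithm (Lucas and Kanade, 1981); finally, merge the aligned pixels into the final image. The algorithm runs in 3 to 4 s on a mobile device. Compared with a single shot, burst photography keeps the image sharp while removing as much of the sensor-size-induced noise as possible, which greatly improves the quality of phone photography.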
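The capture, align, and merge steps can be sketched in a heavily simplified form. The code below works on 1D signals, replaces optical flow with a brute-force search for a single global integer shift, and merges by plain averaging; all three simplifications, as well as the function names, are assumptions made for illustration and are not the pipeline of Hasinoff et al. (2016).

```python
def best_shift(reference, frame, max_shift=3):
    """Integer shift s that minimizes mean |reference[i] - frame[i + s]|."""
    n = len(reference)
    best, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        overlap = [i for i in range(n) if 0 <= i + s < n]
        cost = sum(abs(reference[i] - frame[i + s]) for i in overlap) / len(overlap)
        if cost < best_cost:
            best, best_cost = s, cost
    return best


def merge_burst(frames, max_shift=3):
    """Align every frame to the first one, then average the aligned samples."""
    reference = frames[0]
    n = len(reference)
    total = list(reference)
    count = [1] * n
    for frame in frames[1:]:
        s = best_shift(reference, frame, max_shift)
        for i in range(n):
            j = i + s
            if 0 <= j < n:  # skip samples shifted outside the frame
                total[i] += frame[j]
                count[i] += 1
    return [t / c for t, c in zip(total, count)]
```

Averaging N aligned frames with independent noise lowers the noise standard deviation by roughly a factor of the square root of N, which is why several short exposures can stand in for one long exposure without the motion blur.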

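Burst photography has since developed considerably. Mildenhall et al. (2018) and Godard et al. (2018) use neural networks to further denoise bursts; Aittala and Durand (2018) use a neural network for burst deblurring; Kalantari and Ramamoorthi (2017) synthesize high-quality high dynamic range images from multiple low dynamic range burst frames; Liba et al. (2019) further improve the burst pipeline of the Google Pixel phone to enable high-quality capture in extremely low light; and Wronski et al. (2019) use burst photography to achieve image super-resolution.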

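2 Research progress in China

2.1 End-to-end joint design of optics and algorithms

Research on end-to-end joint design of optics and algorithms started relatively late in China. Sun Qilin's team proposed an end-to-end learned, optically coded super-resolution SPAD camera (Sun et al., 2018, 2020b), which uses a diffractive element to achieve optimal optical sampling and high-quality depth and ultrafast reconstruction. The same team proposed an end-to-end diffractive optics design based on vector decomposition, combining a differentiable diffractive element with a differentiable neural network to achieve single-shot high dynamic range imaging (Sun et al., 2020a). Dun Xiong's team proposed a rotationally symmetric diffractive achromat for full-spectrum computational imaging based on a differentiable diffractive element (Dun et al., 2020), which resolves the fine details and color fidelity of diverse real-world scenes under natural illumination. Shen Junfei's team used a U-Net variant to model the blurring process and achieved end-to-end extended depth-of-field imaging with a single lens (Liu et al., 2021). Hou Qingyu's team proposed end-to-end learned single-lens design with fast differentiable ray tracing (Li et al., 2021). In 2021, Sun Qilin's team at The Chinese University of Hong Kong, Shenzhen and 点昀技术 was the first to implement a complex-lens design engine based on differentiable ray tracing, which directly builds a differentiable relationship between the optical parameters and the final image (Sun et al., 2021b). This pushes end-to-end camera design to a new level, is expected to overturn the traditional approach to optical design, and may lead computational imaging into an era of automatic joint optimization of optics and algorithms.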

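2.2 High dynamic range imaging

On the industrial side, driven by demand from consumer electronics, industrial, and automotive applications, Chinese image sensor manufacturers such as OmniVision, SmartSens, GalaxyCore, and Gpixel have either released HDR image sensor products or have them under pre-research. Most of these products rely on multi-exposure fusion, DOL/Staggered HDR, and similar schemes.

1) Multi-sensor fusion HDR imaging. Multi-exposure HDR imaging often fails to remove ghosts because ghost detection is inaccurate, while ghost removal based on a single reference frame loses detail; Zhang Denghui and Huo Yongqing (2018) proposed a multi-exposure HDR imaging method that generates ghost-free HDR images. Following the principle of expanding the cumulative histograms of two differently exposed images, He Li et al. (2020) expand the dynamic range of each image separately and fuse the expanded image sequence with a pixel-level fusion method.

2) HDR imaging based on intensity modulation. Combining a microchannel plate intensifier with an image sensor, Pan Jingsheng et al. (2017) achieved HDR imaging in both analog and photon-counting modes. Using an imaging method with a fixed number of integration stages and an evaluation function built from image entropy and the variance of the gray-level distribution, Sun Wu et al. (2018) achieved beyond-full-well HDR imaging for a push-broom remote sensing camera. Wang Yanjie et al. (2014), Lü Weizhen et al. (2014), He Shuwen (2015), Lü Tao et al. (2015), Feng Wei et al. (2017), Xu and Hua (2017), Sun et al. (2019), and Zhou et al. (2019) all modulate light intensity with an SLM (spatial light modulator) to achieve HDR imaging.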

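3) Multi-exposure image fusion. Li Xiaochen et al. (2013) use idle circuit resources of the rolling shutter to shorten the interval between exposures, which improves system efficiency and speeds up the output response. For real-time HDR image fusion, Piao Yongjie et al. (2014) improve a fast multiresolution pyramid decomposition fusion algorithm. Hu Yanxiang and Wan Li (2014) guide luminance fusion with the global luminance range, local contrast, and color saturation. Using fusion weight functions that balance local detail and global brightness, Chen Kuo et al. (2015) and Jiang Shenyu et al. (2015) achieve good fast fusion. To address the effect of noise on HDR images, Liu Zongyue (2016) and Chen Yeyao et al. (2018) propose a tone mapping algorithm based on local linear transforms and denoising algorithms for the multi-exposure fusion process. Du Lin et al. (2017) use a differential optical flow method based on color gradients to fuse HDR images of moving targets. Li Xue'ao (2018) performs multi-exposure fusion with a U-Net, which enhances image detail and partly removes motion artifacts. Zhang Shufang et al. (2018) generate HDR images with principal component analysis and a gradient pyramid to avoid halos and graying in the result. Li Hongbo et al. (2018) obtain the fusion coefficients indirectly from the conversion gain and black-level offset parameters, which extends the camera's dynamic range. Wu Rui (2020) improves HDR image generation with a three-level cascaded network together with long short-term memory networks and dilated convolution. Liu Ying et al. (2020) propose an HDR imaging algorithm based on fuzzy fusion over luminance partitions.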

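4) HDR image reconstruction. To obtain accurate luminance and chromaticity of HDR scenes, Wu et al. (2017a) propose a camera-colorimetry-based method for reconstructing the device-independent CIEXYZ color space. Because detail is easily lost in over- and underexposed regions, Chang Meng et al. (2018) correct these regions separately with a two-branch neural network. Li and Fang (2019) propose a hybrid loss function and use a channel attention mechanism to adjust channel features adaptively. Ye Nianjin (2020) proposes a deep-learning method that generates an HDR image from a single LDR frame. Liu et al. (2020) propose a single-exposure HDR restoration method that is robust to camera shake. Liang et al. (2020) design a deep inverse tone mapping network with multi-branch feature extraction and multi-output image synthesis to reconstruct HDR images from a single filtered LDR image. Hou et al. (2021) reconstruct HDR images with a deep unsupervised fusion model. Ye et al. (2021) reconstruct single-exposure HDR images with a deep two-branch network.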

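5) Tone mapping. To accommodate different image luminance ranges, Lu Bibo et al. (2017) first partition the image according to the Weber-Fechner law, compress the dynamic range of each region with logarithmic mappings at different scale parameters, and then blend the results proportionally. By setting luminance thresholds from the mean log-luminance in the CIE space and compressing each segment separately, Liu Ying et al. (2018) achieve good detail and overall brightness. By combining neighborhood-enhanced connections with a visual cortex model, Li Cheng et al. (2018) display HDR images with high visual quality, with a marked effect under non-uniform illumination. Wang Feng and Yan Limin (2019) propose a tone mapping algorithm that combines luminance partitioning with guided filtering. To handle the different color shifts in bright and dark regions during tone mapping, Feng Wei et al. (2020) adaptively correct the chromaticity information.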
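Several of the tone mapping methods above build on logarithmic compression of luminance. A minimal global version can be sketched as follows; it is a generic textbook operator rather than any of the cited algorithms, and the function name and the `scale` parameter are assumptions introduced for illustration. Region-wise methods would apply a mapping of this kind with a different `scale` per luminance region and then blend the results.

```python
import math


def log_tone_map(luminance, scale=1.0):
    """Map non-negative HDR luminance values to [0, 1] with a log curve.

    scale controls how strongly dark values are lifted before compression.
    """
    l_max = max(luminance)
    if l_max <= 0:
        return [0.0 for _ in luminance]
    denom = math.log1p(scale * l_max)
    return [math.log1p(scale * value) / denom for value in luminance]
```

The mapping is monotonic, sends zero to zero and the brightest value to one, and allocates far more of the output range to dark values than a linear scaling would.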

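2.3 Light-field imaging

For light-field 3D reconstruction, the team of Academician Tan Tieniu (Wang et al., 2018) designed an implicit multi-scale fusion scheme for super-resolution reconstruction. Zhang Shuo et al. proposed ResLF, a residual network framework for light-field spatial super-resolution (Zhang et al., 2019d). To spatially super-resolve light-field sub-aperture images, Zhao et al. (2020) proposed a light-field super-resolution method that fuses multi-scale features with a neural network.

For light-field depth estimation, Zhu and Wang (2016) optimized the background algorithm on top of the earlier epipolar plane image (EPI) algorithm. Zhang et al. (2016) proposed the SPO (spinning parallelogram operator) algorithm, which effectively handles the problems introduced by noise, aliasing, and occlusion and yields more accurate disparity maps. Zhang et al. (2016) obtain an initial estimate of line slopes in the EPI through optimal orientation search and achieve strong depth estimation results. Wu et al. (2019a) exploit the clear texture structure of EPIs and model angular super-resolution as CNN-based restoration of angular information in the EPI; the method reconstructs light fields well for complex structures and for different acquisition setups. Shi Shengxian's team (Ma et al., 2019) proposed the VOMMANet depth estimation network, which handles non-Lambertian and textureless or weakly textured surfaces effectively, quickly, and with high accuracy.

Zhang et al. (2019c) proposed a light-field camera calibration method that includes a six-parameter multi-projection-center model of the light-field camera. Zhang and Wang (2018) studied how the multi-projection-center model maps conics and planes, and reconstructed the common self-polar triangle in the light field. Zhang Qi and Wang Qing (2021) proposed a light-field camera calibration method based on the common self-polar triangle of non-concentric circles. Song Zhengxi et al. (2021) addressed incomplete feature trajectories in the 2D epipolar geometry images of circular light fields with a circular light-field 3D reconstruction method based on the 3D Hough transform. Zhang et al. (2021a) extended the self-calibration technique of conventional pinhole cameras to light-field cameras and proposed a light-field camera self-calibration method. Based on Gaussian geometrical optics, Shi Shengxian's team (Zhao Yuanyuan and Shi Shengxian, 2020) proposed a scale calibration algorithm for unfocused light-field cameras used in 3D shape measurement, with absolute accuracy at the micrometer level.

For light-field PIV (LF-PIV), Shi Shengxian's team designed and packaged a light-field camera based on a hexagonal microlens array (Shi et al., 2016a, b). For light-field 3D reconstruction of tracer particles, Shi et al. (2017) proposed the DRT-MART (dense ray tracing-based multiplicative algebraic reconstruction technique) algorithm, which reconstructs tracer particles accurately, while the rich set of viewing angles avoids ghost particles. To correct the coupled optical distortion of the main lens and the microlens array, Shi et al. (2019) and Zhao et al. (2021) successively proposed a calibration algorithm based on the circle-of-confusion model and a MART weighting-coefficient algorithm based on the Monte Carlo method, targeting the optical distortion of light-field imaging with conventional lenses and with tilt-shift lenses. Compared with ray-tracing-based weighting-coefficient algorithms, the algorithm further improves measurement accuracy and stability.

Advances in 3D reconstruction and light-field distortion algorithms have effectively promoted the use of this new technique in a variety of complex flow experiments, including adverse-pressure-gradient boundary layers (Zhao et al., 2019), zero-net-mass-flux impinging jets (Zhao et al., 2021), and supersonic jets (Ding et al., 2019). In addition, systematic comparisons show that single-camera LF-PIV can, under certain conditions, reach the same measurement accuracy as multi-camera tomographic PIV (Tomo-PIV) (Zhao et al., 2019).

For light-field microscopy, the team of Academician Dai Qionghai at Tsinghua University proposed a light-field microscopy system built from a camera array, which supports basic refocusing, viewpoint change, and phase reconstruction (Lin et al., 2015). More recently, the team proposed a digital adaptive optics scanning light-field mutual iterative tomography method (Zhou et al., 2019).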

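2.4 Spectral imaging

Spectral imaging, and snapshot spectral imaging in particular, has also been studied extensively in China. 1) Aperture-division spectral imaging. In representative work, Mu et al. (2019) addressed the spectral overlap in the multi-aperture graded-filter spectral imaging technique of Hubold et al. (2018) with an unmixing method based on direct algebraic reconstruction. The method requires no additional optimization and only re-splits and re-stitches the image on the detector, so processing is fast; in terms of performance, it delivers 80 contiguous spectral channels over the 380 to 850 nm band. 2) Field-division spectral imaging. In representative work, Song et al. (2021) and Zhang et al. (2021b) build on broadband pixel-level filter imaging and propose deep-learning-based broadband filter-encoded spectral imaging. They construct a model that jointly optimizes the broadband spectral encoding and the deep-learning reconstruction (decoding), obtain the best broadband encoding curves for a given physical implementation of the broadband filters, and reconstruct the spectrum at every pixel directly with deep learning. This speeds up spectral reconstruction by a factor of 7 000 to 11 000 and improves its robustness to noise tenfold. 3) Aperture/field-coded spectral imaging. A representative example is the prism-mask modulation video imaging spectrometer (PMVIS) method and prototype proposed at Nanjing University (Cao et al., 2011, 2016). It exploits the dispersion of the prism and the sparse sampling of the mask in a hardware design that captures spectral video in real time, greatly increasing the degrees of freedom in information acquisition. Unlike the principle of CASSI proposed abroad, PMVIS introduces parallel optical paths: RGB video with high spatial resolution is fused with spectral video of low spatial resolution to obtain video with both high spatial and high spectral resolution, resolving the inherent conflict between the two.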

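2.5 Lensless imaging

Lensless imaging research in China has also made considerable progress. Wu et al. (2020a) use a Fresnel zone plate as the optical element and reconstruct single-frame images with a high signal-to-noise ratio under incoherent illumination. Treating the measurement as an in-line hologram, they solve the twin-image problem in reconstruction with compressive sensing. Cai et al. (2020) use a scattering optical element and, building on DiffuserCam, further study how the scattering medium encodes the light field; they develop an algorithm for the inverse problem of recovering light-field images, enabling lensless multi-view imaging with a diffuser.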

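2.6 Low-light imaging

Chinese companies and universities have produced a series of strong results in low-light imaging. On the industry side, the night mode of Huawei phones is impressive; its techniques include fusing an RGB camera with a monochrome camera, using an RYYB array instead of the conventional RGGB to collect more light, using longer bursts to improve the fused image quality, and using optimized image enhancement algorithms to improve the visual appearance. On the academic side, Wei et al. (2020) generate training data through accurate noise modeling in the RAW domain, and networks trained on these data also perform well on real data. Among flash-based methods, flash/no-flash fusion has been proposed to fix the pale color tone of flash images. On the algorithm side, Yan et al. (2013) propose an optimization-based method that uses an intermediate variable, the scale map, to describe the difference between edges in the flash image and edges in the no-flash image; Guo et al. (2020) propose the mutually guided filter muGIF, which guides denoising while preventing information that exists only in the flash image from leaking into the result.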

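2.7 Active 3D imaging

Structured light and TOF are widely studied in China, but little work addresses ambient-light and indirect-light interference. Indirect light mainly refers to light that, after leaving the emitter, is reflected two or more times in the scene before returning to the camera. If the camera's temporal resolution is fine enough, at the picosecond level, the flight of light can be observed; such ultra-high-frame-rate imaging is called transient imaging. By analyzing the transient image, direct and indirect light can be separated. The teams of Academician Dai Qionghai and Liu Yebin at Tsinghua University have done outstanding work here, for example acquiring data with an iTOF frequency sweep (based on a "frequency-phase" matrix) and using the non-sinusoidal characteristics to obtain high-frequency information, thereby achieving transient imaging (Lin et al., 2014, 2017; Wang et al., 2021). For multipath interference in structured light, Zhang et al. (2019e) use patterns at multiple frequencies to handle the case in which one camera pixel sees both foreground and background (one camera pixel corresponds to two projector pixels); Zhang et al. (2021c) assume that one camera pixel may see several projector pixels and obtain sharp depth edges by imposing a signal-sparsity prior.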
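Why indirect light corrupts iTOF depth can be shown with a small numerical sketch. It assumes the common continuous-wave model in which a path of length 2d at modulation frequency f contributes a phasor with phase 4πfd/c, and the sensor measures the sum of all path phasors; the function names and the example values are illustrative assumptions, not part of the cited methods.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def phasor(depth_m, amplitude, freq_hz):
    """Phasor returned by a single path whose one-way length is depth_m."""
    phase = 4.0 * math.pi * freq_hz * depth_m / C
    return amplitude * cmath.exp(1j * phase)


def apparent_depth(measured, freq_hz):
    """Depth an iTOF camera would report from the measured phasor."""
    phase = cmath.phase(measured) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * freq_hz)


freq = 20e6                                  # 20 MHz modulation (assumed)
direct = phasor(2.0, 1.0, freq)              # direct return from 2 m
indirect = phasor(3.0, 0.5, freq)            # weaker, longer indirect path
biased = apparent_depth(direct + indirect, freq)
```

With the direct return alone, `apparent_depth` recovers 2 m; once the indirect phasor is added, the reported depth lies between 2 m and 3 m. Separating the two components, whether by transient imaging or by multi-frequency measurements, is what removes this bias.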

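2.8 Computational photography

Research on computational photography at Chinese research institutions started relatively late. For automatic white balance, Shi et al. (2016c) propose a new deep neural network architecture that discriminates among and selects candidate illumination colors in an image; Hu et al. (2017) use a deep neural network to identify the image regions from which the true color is easy to infer, and compute the illumination color from them. For background blur, Shen et al. (2016a, b) use neural networks to segment portrait images into foreground and background and blur the background uniformly; Zhu et al. (2017a) further propose an algorithm that accelerates portrait foreground-background segmentation. For burst photography, Tan et al. (2019) propose a new residual model for burst denoising.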

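3 Comparison of research progress in China and abroad

In end-to-end joint design of optics and algorithms, particularly the end-to-end design of complex lenses based on differentiable ray tracing, 点昀技术(深圳、南通)有限公司 has been the first to break through the barriers to joint optimization across all stages; the company also holds a complete set of differentiable-diffraction end-to-end design techniques and applications, and is at an internationally leading level. Most mainstream work on end-to-end joint design nevertheless comes from research teams abroad, and domestic efforts in this area still need strengthening. On the other hand, China's imaging industry is well developed, especially in mobile phones, industrial applications, and automotive; new techniques tend to be industrialized quickly, which in turn drives academic progress and forms a virtuous cycle.

In HDR imaging, industrial research in China and abroad concentrates on multi-exposure fusion, filter-based intensity modulation, and new pixel structures, whereas academia is not bound by mass-production requirements and its methods are novel and varied. HDR imaging in consumer electronics mainly relies on phone makers' multi-exposure fusion schemes or Staggered HDR sensors. Apart from the Huawei Mate and P series, all of these use Qualcomm platforms, which are expensive and slow to customize; domestic high-performance image processing platforms still lag well behind those abroad, where Apple, Samsung, and others have in-house platforms. At present, capture and processing for static-scene HDR imaging are maturing, but dynamic-scene HDR imaging remains a major challenge. In algorithms, the gap between China and other countries is narrowing, and some techniques have reached an internationally advanced level. In imaging devices, a large gap remains, mostly because of semiconductor processes and design iteration cycles. In computing platforms, only a few domestic companies remain at the international forefront, and the other vendors depend on processors from foreign suppliers. In new methods, internationally leading techniques have appeared in China; for example, Sun Qilin's team at 点昀技术 (Sun et al., 2020a) uses a diffractive element and deep learning to realize a dynamic HDR solution.

In light-field imaging, other countries explored the field in depth during its early development; the United States and Germany in particular led the study and refinement of basic light-field theory and gradually built the theoretical framework and foundations for light-field depth estimation, super-resolution, and calibration. Lytro and Google in the United States and Raytrix in Germany also applied light-field imaging at the consumer, professional, and industrial levels, bringing light fields into public view. Chinese research institutions such as Tsinghua University, the Institute of Automation of the Chinese Academy of Sciences, Shanghai Jiao Tong University, and Northwestern Polytechnical University have built on this theoretical framework, pursued specific needs in depth, and made breakthroughs in light-field algorithms, theory, and applications. In algorithms, the gap is narrowing, and some techniques have reached an internationally leading level. After Lytro's consumer light-field camera left the market in 2018, Raytrix was for a time the only light-field camera supplier in the world. 奕目科技 (VOMMA) broke this monopoly in 2019 with a series of self-developed industrial light-field cameras, which have been deployed in volume for in-line 3D defect inspection of chips, display modules, and power batteries. Given China's broader industrial demand and strong government support, domestic light-field technology is already showing signs of overtaking in these areas.

Spectral imaging, and snapshot spectral imaging in particular, has been studied extensively in China, and in this area Chinese researchers are essentially on par with their counterparts abroad. For example, the PMVIS system from Nanjing University, the deep-learning-driven broadband filter-encoded spectral imaging from Zhejiang University, and the aperture-division spectral imaging from Xi'an Jiaotong University are each among the representative techniques in their directions. Most mainstream spectral reconstruction algorithms, however, come from research teams abroad, and domestic research on optimization algorithms for spectral reconstruction still needs strengthening.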

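In lensless imaging, research both in China and abroad focuses on optimizing the optical system and on image reconstruction algorithms. Work abroad covers both aspects and has produced lensless optical designs of various forms, such as FlatCam, DiffuserCam, PhlatCam, and Voronoi-Fresnel; these systems have been further applied to recovering high-speed video from a single lensless frame, multispectral imaging, and privacy-preserving object recognition. Work in China focuses on exploiting existing optical structures, developing efficient reconstruction algorithms, and applying them to inverse problems such as 2D image reconstruction and light-field reconstruction. With the rapid development of deep learning, researchers in China and abroad are now studying deep-learning reconstruction for lensless imaging systems.

Low-light imaging is usually led by industry, for example Google, Huawei, and Apple. In terms of performance, the night mode of Huawei phones has reached a leading level, although Huawei and Apple take slightly different routes to image enhancement: the former pursues visual appeal and the latter emphasizes fidelity. In terms of published research, work abroad is still more influential than domestic work; Google's night-photography papers, for instance, are leading the industry.

In active 3D imaging, research on robustness (ambient-light interference, indirect-light interference, and so on) is still led by universities abroad, such as Shree Nayar's team at Columbia University in the United States; Wolfgang Heidrich's team at King Abdullah University of Science and Technology (KAUST) in Saudi Arabia and its offshoots (Gordon Wetzstein's team at Stanford University, Felix Heide's team at Princeton University, Sun Qilin's team at The Chinese University of Hong Kong, Shenzhen, and others); the computational imaging group at Carnegie Mellon University (CMU), including Srinivasa Narasimhan and Matthew P. O′Toole; Mohit Gupta's team at the University of Wisconsin-Madison; and Kyros Kutulakos's team at the University of Toronto in Canada. A likely reason is that this work generally requires combining hardware and software, spans optics, electronics, and mechanics, and demands a large up-front investment in optical instruments.

In computational photography, Chinese research institutions started later than those abroad. European and American institutions are therefore generally "leading" while Chinese institutions are mostly "following": most pioneering work in computational photography is carried out in Europe and the United States, whereas Chinese institutions mainly improve and optimize the methods proposed there. On the other hand, Chinese phone makers such as Huawei, Honor, OPPO, Xiaomi, and VIVO have invested heavily in computational photography research and released many related products. These companies publish few papers, however; it is to be hoped that industrial research groups will use forums, white papers, and publications to help researchers keep up with the industrial applications and development of computational photography.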

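4 Conclusion

End-to-end joint design of optics and algorithms aims to break down the barriers among optics, sensors, image post-processing algorithms, and display processing in traditional imaging systems, reduce the dependence of each stage on human experience, and offer new turnkey solutions for many scenarios. The approach has already seen breakthroughs: it can be applied directly to the mutual optimization of complex lenses and their post-processing, remains compatible with traditional optical design and back-end processing, and has many applications, such as thinner lenses, lower production cost, and special-purpose functions. Although the technology is still at an early stage, it has become a focus of competition across the related industries, and in the short term it can be expected to reach industrial application within the next few years. In the long term, the overall trend is not only joint design and optimization of optics, sensors, algorithms, and processors but also integration; in particular, sensors that integrate the ISP and are matched to the optics will substantially lower production costs across the optics and camera-module industry, reduce downstream manufacturers' dependence on high-performance processors, and increase design freedom. In addition, with the development of 5G, high-speed, low-latency communication allows cloud computing resources to support the computationally heavy parts of mobile computational imaging, and mainstream manufacturers in China and abroad have already begun to position themselves. As end-to-end imaging matures, it is expected to reshuffle the entire imaging industry chain and break through cost and functionality bottlenecks.

HDR imaging has gradually been adopted in consumer electronics, automotive, and industrial scenarios; it has become a focus of competition among phone makers and is a prerequisite for automotive-grade image sensors. Future developments to watch include: 1) New analog image sensors, represented by Two-bucket cameras and large/small pixel structures, which greatly improve sensitivity and signal-to-noise ratio in low light; and counting image sensors, represented by SPAD arrays, QIS, and the Spiking Cameras of Huang Tiejun's team at Peking University (Zheng et al., 2021), which are highly sensitive and replace analog charge integration with digital counters, giving very large dynamic range and design flexibility. 2) Although traditional algorithms and recent deep neural networks have been very successful in final image quality, they still consume considerable computing power and suffer from low speed and high latency, especially in power-sensitive settings such as phones and latency-sensitive settings such as industrial and automotive applications. Two technical trends follow. One is to implement the algorithm in gate-level optimized ASIC (application specific integrated circuit) logic; a streaming architecture ensures low power and low latency, which suits low-power mobile devices and meets the strict latency requirements of industrial, automotive, and military applications, and the overall module cost falls because fewer components are needed. 点昀技术 has already implemented this approach. The other trend is cloud computing: workloads that exceed the compute or power budget of the device are uploaded to the cloud. With the spread of low-latency 5G transmission, most phone and car manufacturers have already begun to deploy cloud processing.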

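Light-field imaging has developed rapidly in the past few years, with industrial applications emerging in manufacturing, VR/AR, biomedicine, and autonomous driving. Different application scenarios place different demands on light-field imaging. Future developments to watch include: 1) High-accuracy 3D reconstruction. As light fields enter industrial use, accuracy at a given field of view is their main limitation and weakness relative to other 3D measurement techniques. 2) 3D reconstruction of non-Lambertian, textureless, and occluded regions. As a passive imaging technique, the light field shares the core weakness of stereo imaging: it is limited on non-Lambertian and textureless regions, where some light-field algorithms lose accuracy or fail. 3) High-quality reconstruction of scenes under real illumination. In virtual reality, light-field imaging systems can fully capture multi-angle illumination information, yet 3D scenes reconstructed by existing algorithms are still not realistic enough. 4) High-performance 3D reconstruction. In autonomous driving and biomedicine, the real-time performance and computational efficiency of 3D measurement remain bottlenecks.

Spectral imaging has advanced considerably in the past few years. Pixel-level filter-array spectral imaging in particular is a technique that is very likely to bring spectral imaging systems to phones and other mobile devices. Although planar filter devices such as metasurfaces, photonic crystals, and silver nanowires have greatly reduced the difficulty of fabricating pixel-level filters, these new filters are generally angle-sensitive, which restricts the conditions under which they can be used in practice. There is an urgent need for filter devices that are both manufacturable and robust to angle changes, complemented by application-oriented end-to-end co-design of filter spectral curves and spectral reconstruction algorithms. Moreover, pixel-level filter spectral imaging with real-time capability (in both acquisition and processing) struggles to deliver high spatial and high spectral resolution at once. Some multispectral fusion solutions exist, but the spectral accuracy of the fused result still needs improvement; how to acquire hyperspectral images with higher spatial and spectral resolution accurately and at low time cost remains well worth studying.

Lensless imaging systems have an extremely simple hardware structure and shift more of the image reconstruction onto relatively inexpensive algorithms, so they are highly valuable for simplifying optical systems. As deep learning is applied more widely, lensless imaging is expected to find broader use in many areas, such as computational ambient imaging, privacy protection, and the Internet of Things.

Low-light imaging has made great progress and its results are already widely used, but problems remain: single-frame denoising hits a quality ceiling because too much information is lost; burst denoising takes too long to capture, is computationally expensive, and still fails in extremely weak light; flash-based methods are limited to short distances; and new sensors such as SPADs have low spatial resolution and large pixels. Capturing video in low light remains a difficult and pressing problem.

Active 3D imaging has seen many solutions to ambient-light and indirect-light interference; most of the results have been patented and some are already in use. Many solutions, however, target only one problem. What 3D imaging ultimately needs is a sensor with high spatial resolution, high temporal resolution, and high depth accuracy that works reliably in complex and harsh environments and is inexpensive. Such methods are still lacking; in the short term, combinations of existing methods with deep learning may appear. 点昀技术 is about to release a high-accuracy, low-latency RGBD camera.

Computational photography has flourished over the past 10 years, and most of its core problems have largely been solved. Some problems remain, however: 1) Most computational photography algorithms, such as burst photography, cannot run in real time. They can therefore run only at capture time and not in the viewfinder. How to accelerate these algorithms so that they run in real time is a pressing problem. 2) Computational photography still focuses on still photos rather than video. With the rise of short-video platforms, video capture is becoming mainstream, so high-quality video capture (low light, high dynamic range) is a direction worth studying. 3) Current burst photography still operates at the pixel level; incorporating methods such as scene recognition into the capture process may further improve photo quality.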

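Acknowledgments This article was organized and written by the Technical Committee on 3D Vision of the China Society of Image and Graphics (CSIG). For more details on the committee, see http://www.csig.org.cn/detail/2696.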

References

-

Abdelhamed A, Lin S and Brown M S. 2018. A high-quality denoising dataset for smartphone cameras//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE: 1692-1700[DOI: 10.1109/CVPR.2018.00182]

-

Achar S, Bartels J R, Whittaker W L R, Kutulakos K N, Narasimhan S G. 2017. Epipolar time-of-flight imaging. ACM Transactions on Graphics, 36(4): #37 [DOI:10.1145/3072959.3073686]

-

Adelson E H, Wang J Y A. 1992. Single lens stereo with a plenoptic camera. IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(2): 99-106 [DOI:10.1109/34.121783]

-

Aggarwal M and Ahuja N. 2001. Split aperture imaging for high dynamic range//Proceedings of 2001 IEEE International Conference on Computer Vision. Vancouver, Canada: IEEE: #10[DOI: 10.1109/ICCV.2001.937583]

-

Aggarwal M, Ahuja N. 2004. Split aperture imaging for high dynamic range. International Journal of Computer Vision, 58(1): 7-17 [DOI:10.1023/B:VISI.0000016144.56397.1a]

-

Aittala M and Durand F. 2018. Burst image deblurring using permutation invariant convolutional neural networks//Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer: 748-764[DOI: 10.1007/978-3-030-01237-3_45]

-

Akyüz A O, Fleming R, Riecke B E, Reinhard E, Bülthoff H H. 2007. Do HDR displays support LDR content? A psychophysical evaluation. ACM Transactions on Graphics, 26(3): #38 [DOI:10.1145/1276377.1276425]

-

Alain M and Smolic A. 2017. Light field denoising by sparse 5D transform domain collaborative filtering//Proceedings of the 19th International Workshop on Multimedia Signal Processing. Luton, UK: IEEE: 1-6[DOI: 10.1109/MMSP.2017.8122232]

-

Alain M and Smolic A. 2018. Light field super-resolution via LFBM5D sparse coding//Proceedings of the 25th IEEE International Conference on Image Processing. Athens, Greece: IEEE: 2501-2505[DOI: 10.1109/ICIP.2018.8451162]

-

Alghamdi M, Fu Q, Thabet A, Heidrich W. 2021. Transfer deep learning for reconfigurable snapshot HDR imaging using coded masks. Computer Graphics Forum, 40(6): 90-103 [DOI:10.1111/cgf.14205]

-

Alperovich A, Johannsen O, Strecke M and Goldluecke B. 2018. Light field intrinsics with a deep encoder-decoder network//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE: 9145-9154[DOI: 10.1109/CVPR.2018.00953]

-

Antipa N, Kuo G, Heckel R, Mildenhall B, Bostan E, Ng R, Waller L. 2018. DiffuserCam: lensless single-exposure 3D imaging. Optica, 5(1): 1-9 [DOI:10.1364/optica.5.000001]

-

Asatsuma T, Sakano Y, Iida S, Takami M, Yoshiba I, Ohba N, Mizuno H, Oka T, Yamaguchi K, Suzuki A and Suzuki K. 2019. Sub-pixel architecture of CMOS image sensor achieving over 120 dB dynamic range with less motion artifact characteristics//Proceedings of 2019 International Image Sensor Workshop. [s. l.]: [s. n.]: 250-253

-

Asif M S, Ayremlou A, Sankaranarayanan A, Veeraraghavan A, Baraniuk R G. 2017. FlatCam: thin, lensless cameras using coded aperture and computation. IEEE Transactions on Computational Imaging, 3(3): 384-397 [DOI:10.1109/tci.2016.2593662]

-

Baek S H, Ikoma H, Jeon D S, Li Y Q, Heidrich W, Wetzstein G and Kim M H. 2021. Single-shot hyperspectral-depth imaging with learned diffractive optics//Proceedings of 2021 IEEE/CVF International Conference on Computer Vision. Montreal, Canada: IEEE: 2631-2640[DOI: 10.1109/ICCV48922.2021.00265]

-

Baek S H, Kim I, Gutierrez D, Kim M H. 2017. Compact single-shot hyperspectral imaging using a prism. ACM Transactions on Graphics, 36(6): #217 [DOI:10.1145/3130800.3130896]

-

Banerji S, Meem M, Majumder A, Vasquez F G, Sensale-Rodriguez B, Menon R. 2019. Imaging with flat optics: metalenses or diffractive lenses?. Optica, 6(6): 805-810 [DOI:10.1364/optica.6.000805]

-

Banterle F, Artusi A, Debattista K, Chalmers A. 2011. Advanced High Dynamic Range Imaging: Theory and Practice. Natick: A K Peters

-

Banterle F, Ledda P, Debattista K and Chalmers A. 2006. Inverse tone mapping//Proceedings of the 4th International Conference on Computer Graphics and Interactive Techniques in Australasia and Southeast Asia. Kuala Lumpur, Malaysia: ACM: 349-356[DOI: 10.1145/1174429.1174489]

-

Bao J, Bawendi M G. 2015. A colloidal quantum dot spectrometer. Nature, 523(7558): 67-70 [DOI:10.1038/nature14576]

-

Barron J T. 2015. Convolutional color constancy//Proceedings of 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE: 379-387[DOI: 10.1109/ICCV.2015.51]

-

Barron J T and Tsai Y T. 2017. Fast fourier color constancy//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE: 886-894[DOI: 10.1109/CVPR.2017.735]

-

Bartels J R, Wang J, Whittaker W and Narasimhan S G. 2019. Agile depth sensing using triangulation light curtains//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea(South): IEEE: 7899-7907[DOI: 10.1109/ICCV.2019.00799]

-

Bodkin A, Sheinis A, Norton A, Daly J, Beaven S and Weinheimer J. 2009. Snapshot hyperspectral imaging: the hyperpixel array camera//Proceedings of SPIE 7334, Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XV. Orlando, USA: SPIE: #818929[DOI: 10.1117/12.818929]

-

Bok Y, Jeon H G, Kweon I S. 2017. Geometric calibration of micro-lens-based light field cameras using line features. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(2): 287-300 [DOI:10.1109/TPAMI.2016.2541145]

-

Boominathan V, Adams J K, Asif M S, Avants B W, Robinson J T, Baraniuk R G, Sankaranarayanan A C, Veeraraghavan A. 2016. Lensless imaging: a computational renaissance. IEEE Signal Processing Magazine, 33(5): 23-35 [DOI:10.1109/msp.2016.2581921]

-

Boominathan V, Adams J K, Robinson J T, Veeraraghavan A. 2020. PhlatCam: designed phase-mask based thin lensless camera. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(7): 1618-1629 [DOI:10.1109/tpami.2020.2987489]

-

Boominathan V, Robinson J T, Waller L, Veeraraghavan A. 2022. Recent advances in lensless imaging. Optica, 9(1): 1-16 [DOI:10.1364/optica.431361]

-

Brady D J and Gehm M E. 2006. Compressive imaging spectrometers using coded apertures//Proceedings of SPIE 6246, Visual Information Processing XV. Orlando, USA: SPIE: #667605[DOI: 10.1117/12.667605]

-

Brainard D H, Freeman W T. 1997. Bayesian color constancy. Journal of the Optical Society of America A, 14(7): 1393-1411 [DOI:10.1364/josaa.14.001393]

-

Brooks T, Mildenhall B, Xue T F, Chen J W, Sharlet D and Barron J T. 2019. Unprocessing images for learned raw denoising//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE: 11028-11037[DOI: 10.1109/CVPR.2019.01129]

-

Buchsbaum G. 1980. A spatial processor model for object colour perception. Journal of the Franklin Institute, 310(1): 1-26 [DOI:10.1016/0016-0032(80)90058-7]

-

Cadusch J J, Meng J J, Craig B, Crozier K B. 2019. Silicon microspectrometer chip based on nanostructured fishnet photodetectors with tailored responsivities and machine learning. Optica, 6(9): 1171-1177 [DOI:10.1364/optica.6.001171]

-

Cai Z W, Chen J W, Pedrini G, Osten W, Liu X L, Peng X. 2020. Lensless light-field imaging through diffuser encoding. Light: Science and Applications, 9(1): #143 [DOI:10.1038/s41377-020-00380-x]

-

Cao X, Lai K, Yanushkevich S N and Smith M R. 2020. Adversarial and adaptive tone mapping operator for high dynamic range images//Proceedings of 2020 IEEE Symposium Series on Computational Intelligence (SSCI). Canberra, Australia: IEEE: 1814-1821[DOI: 10.1109/SSCI47803.2020.9308535]

-

Cao X, Tong X, Dai Q H and Lin S. 2011. High resolution multispectral video capture with a hybrid camera system//Proceedings of the CVPR 2011. Colorado Springs, USA: IEEE: 297-304[DOI: 10.1109/CVPR.2011.5995418]

-

Cao X, Yue T, Lin X, Lin S, Yuan X, Dai Q H, Carin L, Brady D J. 2016. Computational snapshot multispectral cameras: Toward dynamic capture of the spectral world. IEEE Signal Processing Magazine, 33(5): 95-108 [DOI:10.1109/msp.2016.2582378]

-

Capasso F. 2018. The future and promise of flat optics: a personal perspective. Nanophotonics, 7(6): 953-957 [DOI:10.1515/nanoph-2018-0004]

-

Carlsohn M F, Kemmling A, Petersen A and Wietzke L. 2016. 3D real-time visualization of blood flow in cerebral aneurysms by light field particle image velocimetry//Proceedings of SPIE 9897, Real-Time Image and Video Processing. Brussels, Belgium: SPIE: #2220417[DOI: 10.1117/12.2220417]

-

Chang M, Feng H J, Xu Z H, Li Q. 2018. Exposure correction and detail enhancement for single LDR image. Acta Photonica Sinica, 47(4): #0410003 (常猛, 冯华君, 徐之海, 李奇. 2018. 单张LDR图像的曝光校正与细节增强. 光子学报, 47(4): #0410003) [DOI:10.3788/gzxb20184704.0410003]

-

Chang J L, Sitzmann V, Dun X, Heidrich W, Wetzstein G. 2018. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Scientific Reports, 8(1): #12324 [DOI:10.1038/s41598-018-30619-y]

-

Chang J L and Wetzstein G. 2019. Deep optics for monocular depth estimation and 3D object detection//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea(South): IEEE: 10192-10201[DOI: 10.1109/ICCV.2019.01029]

-

Chen B, Pan B. 2018. Full-field surface 3D shape and displacement measurements using an unfocused plenoptic camera. Experimental Mechanics, 58(5): 831-845 [DOI:10.1007/s11340-018-0383-6]

-

Chen C, Chen Q F, Do M and Koltun V. 2019. Seeing motion in the dark//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea(South): IEEE: 3184-3193[DOI: 10.1109/ICCV.2019.00328]

-

Chen C, Chen Q F, Xu J and Koltun V. 2018a. Learning to see in the dark//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE: 3291-3300[DOI: 10.1109/CVPR.2018.00347]

-

Chen J W, Paris S, Durand F. 2007a. Real-time edge-aware image processing with the bilateral grid. ACM Transactions on Graphics, 26(3): #103 [DOI:10.1145/1276377.1276506]

-

Chen K, Feng H J, Xu Z H, Li Q and Chen Y T. 2015. Fast detail-preserving exposure fusion. Journal of Zhejiang University (Engineering Science), 49(6): 1048-1054 (陈阔, 冯华君, 徐之海, 李奇, 陈跃庭. 2015. 细节保持的快速曝光融合. 浙江大学学报(工学版), 49(6): 1048-1054) [DOI: 10.3785/j.issn.1008-973X.2015.06.007]

-

Chen T B, Lensch H P A, Fuchs C and Seidel H P. 2007b. Polarization and phase-shifting for 3D scanning of translucent objects//Proceedings of 2007 IEEE Conference on Computer Vision and Pattern Recognition. Minneapolis, USA: IEEE: 1-8[DOI: 10.1109/CVPR.2007.383209]

-

Chen T B, Seidel H P and Lensch H P A. 2008. Modulated phase-shifting for 3D scanning//Proceedings of 2008 IEEE Conference on Computer Vision and Pattern Recognition. Anchorage, USA: IEEE: 1-8[DOI: 10.1109/CVPR.2008.4587836]

-

Chen W, Wang Q. 2020. Reconstruction method of HDR image based on convolutional neural network for LDR image. Packaging Engineering, 41(5): 228-234 (陈文, 王强. 2020. 基于卷积神经网络的LDR图像重建HDR图像的方法研究. 包装工程, 41(5): 228-234) [DOI:10.19554/j.cnki.1001-3563.2020.05.033]

-

Chen X Y, Liu Y H, Zhang Z W, Qiao Y and Dong C. 2021. HDRUNet: single image HDR reconstruction with denoising and dequantization//Proceedings of 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Nashville, USA: IEEE: 354-363[DOI: 10.1109/CVPRW53098.2021.00045]

-

Chen Y Y, Jiang G Y, Shao H, Jiang H, Yu M. 2018. Noise suppression algorithm in the process of high dynamic range image fusion. Opto-Electronic Engineering, 45(7): #180083 (陈晔曜, 蒋刚毅, 邵华, 姜浩, 郁梅. 2018. 高动态范围图像融合过程中的噪声抑制算法. 光电工程, 45(7): #180083) [DOI:10.12086/oee.2018.180083]

-

Cheng D L, Prasad D K, Brown M S. 2014. Illuminant estimation for color constancy: why spatial-domain methods work and the role of the color distribution. Journal of the Optical Society of America A, 31(5): 1049-1058 [DOI:10.1364/josaa.31.001049]

-

Cheng Y, Cao J, Zhang Y K, Hao Q. 2019b. Review of state-of-the-art artificial compound eye imaging systems. Bioinspiration and Biomimetics, 14(3): #031002 [DOI:10.1088/1748-3190/aaffb5]

-

Cheng Z, Xiong Z W, Chen C and Liu D. 2019a. Light field super-resolution: a benchmark//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Long Beach, USA: IEEE: 1804-1813[DOI: 10.1109/CVPRW.2019.00231]

-

Choi S, Cho J, Song W, Choe J, Yoo J, Sohn K. 2020. Pyramid inter-attention for high dynamic range imaging. Sensors, 20(18): #5102 [DOI:10.3390/s20185102]

-

Content R, Blake S, Dunlop C, Nandi D, Sharples R, Talbot G, Shanks T, Donoghue D, Galiatsatos N, Luke P. 2013. New microslice technology for hyperspectral imaging. Remote Sensing, 5(3): 1204-1219 [DOI:10.3390/rs5031204]

-

Couture V, Martin N and Roy S. 2011. Unstructured light scanning to overcome interreflections//Proceedings of 2011 International Conference on Computer Vision. Barcelona, Spain: IEEE: 1895-1902[DOI: 10.1109/ICCV.2011.6126458]

-

Craig B, Shrestha V R, Meng J J, Cadusch J J, Crozier K B. 2018. Experimental demonstration of infrared spectral reconstruction using plasmonic metasurfaces. Optics Letters, 43(18): 4481-4484 [DOI:10.1364/OL.43.004481]

-

Dansereau D G, Pizarro O and Williams S B. 2013. Decoding, calibration and rectification for lenselet-based plenoptic cameras//Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition. Portland, USA: IEEE: 1027-1034[DOI: 10.1109/CVPR.2013.137]

-

Debevec P E and Malik J. 1997. Recovering high dynamic range radiance maps from photographs//Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '97). USA: ACM: 369-378[DOI: 10.1145/258734.258884]

-

Debevec P E and Malik J. 2008. Recovering high dynamic range radiance maps from photographs//Proceedings of the ACM SIGGRAPH 2008 Classes. Los Angeles, USA: ACM: #31[DOI: 10.1145/1401132.1401174]

-

DeWeert M J, Farm B P. 2015. Lensless coded-aperture imaging with separable Doubly-Toeplitz masks. Optical Engineering, 54(2): #023102 [DOI:10.1117/1.oe.54.2.023102]

-

Ding J F, Li H T, Ma H X, Shi S X, New T H. 2019. A novel light field imaging based 3D geometry measurement technique for turbomachinery blades. Measurement Science and Technology, 30(11): #115901 [DOI:10.1088/1361-6501/ab310b]

-

Ding J F, Lim H D, Sheikh S, Xu S M, Shi S X and New T H. 2018. Volumetric measurement of a supersonic jet with single-camera light-field PIV//The 19th International Symposium on the Application of Laser and Imaging Techniques to Fluid Mechanics. Lisbon, Portugal: [s. n.]

-

Drago F, Myszkowski K, Annen T, Chiba N. 2003. Adaptive logarithmic mapping for displaying high contrast scenes. Computer Graphics Forum, 22(3): 419-426 [DOI:10.1111/1467-8659.00689]

-

Du L, Sun H Y, Wang S, Gao Y X, Qi Y Y. 2017. High dynamic range image fusion algorithm for moving targets. Acta Optica Sinica, 37(4): 101-109 (都琳, 孙华燕, 王帅, 高宇轩, 齐莹莹. 2017. 针对动态目标的高动态范围图像融合算法研究. 光学学报, 37(4): 101-109) [DOI:10.3788/AOS201737.0410001]

-

Dun X, Ikoma H, Wetzstein G, Wang Z, Cheng X, Peng Y. 2020. Learned rotationally symmetric diffractive achromat for full-spectrum computational imaging. Optica, 7(8): 913-922 [DOI:10.1364/OPTICA.394413]

-

Durand F and Dorsey J. 2000. Interactive tone mapping//Proceedings of the Eurographics Workshop on Rendering Techniques. Czech Republic: Springer: 219-230[DOI: 10.1007/978-3-7091-6303-0_20]

-

Durand F and Dorsey J. 2002. Fast bilateral filtering for the display of high-dynamic-range images//Proceedings of the 29th annual Conference on Computer Graphics and Interactive Techniques. San Antonio, USA: ACM: 257-266[DOI: 10.1145/566570.566574]

-

Dwight J G, Tkaczyk T S. 2017. Lenslet array tunable snapshot imaging spectrometer (LATIS) for hyperspectral fluorescence microscopy. Biomedical Optics Express, 8(3): 1950-1964 [DOI:10.1364/boe.8.001950]

-

Egiazarian K and Katkovnik V. 2015. Single image super-resolution via BM3D sparse coding//Proceedings of the 23rd European Signal Processing Conference. Nice, France: IEEE: 2849-2853[DOI: 10.1109/EUSIPCO.2015.7362905]

-

Eigen D, Puhrsch C and Fergus R. 2014. Depth map prediction from a single image using a multi-scale deep network//Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press: 2366-2374

-

Eilertsen G, Kronander J, Denes G, Mantiuk R K, Unger J. 2017. HDR image reconstruction from a single exposure using deep CNNs. ACM Transactions on Graphics, 36(6): #178 [DOI:10.1145/3130800.3130816]

-

Eisemann E, Durand F. 2004. Flash photography enhancement via intrinsic relighting. ACM Transactions on Graphics, 23(3): 673-678 [DOI:10.1145/1015706.1015778]

-

Endo Y, Kanamori Y, Mitani J. 2017. Deep reverse tone mapping. ACM Transactions on Graphics, 36(6): #177 [DOI:10.1145/3130800.3130834]

-

Erdozain J, Ichimaru K, Maeda T, Kawasaki H, Raskar R and Kadambi A. 2020. 3D imaging for thermal cameras using structured light//Proceedings of 2020 IEEE International Conference on Image Processing. Abu Dhabi, United Arab Emirates: IEEE: 2795-2799[DOI: 10.1109/ICIP40778.2020.9191297]

-

Fahringer T W, Lynch K P, Thurow B S. 2015. Volumetric particle image velocimetry with a single plenoptic camera. Measurement Science and Technology, 26(11): #115201 [DOI:10.1088/0957-0233/26/11/115201]

-

Fahringer T W, Thurow B S. 2016. Filtered refocusing: a volumetric reconstruction algorithm for plenoptic-PIV. Measurement Science and Technology, 27(9): #094005 [DOI:10.1088/0957-0233/27/9/094005]

-

Fahringer T W, Thurow B S. 2018. Plenoptic particle image velocimetry with multiple plenoptic cameras. Measurement Science and Technology, 29(7): #075202 [DOI:10.1088/1361-6501/aabe1d]

-

Feng W, Liu H D, Wu G M, Zhao D X. 2020. Gradient domain adaptive tone mapping algorithm based on color correction model. Laser and Optoelectronics Progress, 57(8): #081007 (冯维, 刘红帝, 吴贵铭, 赵大兴. 2020. 基于颜色校正模型的梯度域自适应色调映射算法. 激光与光电子学进展, 57(8): #081007) [DOI:10.3788/LOP57.081007]

-

Feng W, Zhang F M, Qu X H, Zheng S W. 2016. Per-pixel coded exposure for high-speed and high-resolution imaging using a digital micromirror device camera. Sensors, 16(3): #331 [DOI:10.3390/s16030331]

-

Feng W, Zhang F M, Wang W J, Qu X H. 2017. Adaptive high-dynamic-range imaging method and its application based on digital micromirror device. Acta Physica Sinica, 66(23): #234201 (冯维, 张福民, 王惟婧, 曲兴华. 2017. 基于数字微镜器件的自适应高动态范围成像方法及应用. 物理学报, 66(23): #234201)

-

Feng W, Zhang F M, Wang W J, Xing W, Qu X H. 2017. Digital micromirror device camera with per-pixel coded exposure for high dynamic range imaging. Applied Optics, 56(13): 3831-3840 [DOI:10.1364/AO.56.003831]

-

Finlayson G D, Hordley S D, Tastl I. 2006. Gamut constrained illuminant estimation. International Journal of Computer Vision, 67(1): 93-109 [DOI:10.1007/s11263-006-4100-z]

-

Finlayson G D and Trezzi E. 2004. Shades of gray and colour constancy//Proceedings of the 12th Color and Imaging Conference Final Program and Proceedings. [s. l. ]: Society for Imaging Science and Technology: 37-41

-

Frank S A. 2018. A biochemical logarithmic sensor with broad dynamic range. F1000Research, 7: #200 [DOI:10.12688/f1000research.14016.3]

-

French R, Gigan S, Muskens O L. 2017. Speckle-based hyperspectral imaging combining multiple scattering and compressive sensing in nanowire mats. Optics Letters, 42(9): 1820-1823 [DOI:10.1364/ol.42.001820]

-

Fu Q, Yan D M and Heidrich W. 2021. Compound eye inspired flat lensless imaging with spatially-coded Voronoi-Fresnel phase[EB/OL]. [2021-12-15]. https://arxiv.org/pdf/2109.13703.pdf

-

Fu Y, Zhang T, Zheng Y Q, Zhang D B, Huang H. 2022. Joint camera spectral response selection and hyperspectral image recovery. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(1): 256-272 [DOI:10.1109/TPAMI.2020.3009999]

-

Gallo O, Gelfandz N, Chen W C, Tico M and Pulli K. 2009. Artifact-free high dynamic range imaging//Proceedings of 2009 IEEE International Conference on Computational Photography. San Francisco, USA: IEEE: 1-7[DOI: 10.1109/ICCPHOT.2009.5559003]

-

Gao L, Kester R T, Hagen N, Tkaczyk T S. 2010. Snapshot Image Mapping Spectrometer (IMS) with high sampling density for hyperspectral microscopy. Optics Express, 18(14): 14330-14344 [DOI:10.1364/oe.18.014330]

-

Gabor D. 1948. A new microscopic principle. Nature, 161: 777-778 [DOI:10.1038/161777a0]

-

Gat N, Scriven G, Garman J, Li M D and Zhang J Y. 2006. Development of four-dimensional imaging spectrometers (4D-IS)//Proceedings of SPIE 6302, Imaging Spectrometry XI. San Diego, USA: SPIE: #678082[DOI: 10.1117/12.678082]

-

Geelen B, Jayapala M, Tack N and Lambrechts A. 2013. Low-complexity image processing for a high-throughput low-latency snapshot multispectral imager with integrated tiled filters//Proceedings of SPIE 8743, Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XIX. Baltimore, USA: SPIE: #2015248[DOI: 10.1117/12.2015248]

-

Geelen B, Tack N and Lambrechts A. 2014. A compact snapshot multispectral imager with a monolithically integrated per-pixel filter mosaic//Proceedings of SPIE 8974, Advanced Fabrication Technologies for Micro/Nano Optics and Photonics VII. San Francisco, USA: SPIE: #2037607[DOI: 10.1117/12.2037607]

-

Gehler P V, Rother C, Blake A, Minka T and Sharp T. 2008. Bayesian color constancy revisited//Proceedings of 2008 IEEE Conference on Computer Vision and Pattern Recognition. Anchorage, USA: IEEE: 1-8[DOI: 10.1109/CVPR.2008.4587765]

-

Georgiev T, Chunev G and Lumsdaine A. 2011. Superresolution with the focused plenoptic camera//Proceedings of SPIE 7873, Computational Imaging IX. San Francisco, USA: SPIE: #78730X[DOI: 10.1117/12.872666]

-

Gershun A. 1939. The light field. Journal of Mathematics and Physics, 18(1/4): 51-151 [DOI:10.1002/sapm193918151]

-

Gharbi M, Chen J W, Barron J T, Hasinoff S W, Durand F. 2017. Deep bilateral learning for real-time image enhancement. ACM Transactions on Graphics, 36(4): #118 [DOI:10.1145/3072959.3073592]

-

Gill P R. 2013. Odd-symmetry phase gratings produce optical nulls uniquely insensitive to wavelength and depth. Optics Letters, 38(12): 2074-2076 [DOI:10.1364/ol.38.002074]

-

Gluskin M G. 2020. Quad color filter array camera sensor configurations. U.S., No. 16/715,570

-

Gnanasambandam A, Chan S H. 2020. HDR imaging with quanta image sensors: theoretical limits and optimal reconstruction. IEEE Transactions on Computational Imaging, 6: 1571-1585 [DOI:10.1109/tci.2020.3041093]

-

Godard C, Matzen K and Uyttendaele M. 2018. Deep burst denoising//Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer: 560-577[DOI: 10.1007/978-3-030-01267-0_33]

-

Golub M A, Averbuch A, Nathan M, Zheludev V A, Hauser J, Gurevitch S, Malinsky R, Kagan A. 2016. Compressed sensing snapshot spectral imaging by a regular digital camera with an added optical diffuser. Applied Optics, 55(3): 432-443 [DOI:10.1364/ao.55.000432]

-

Goshtasby A A. 2005. Fusion of multi-exposure images. Image and Vision Computing, 23(6): 611-618 [CiteSeerX: 10.1.1.103.2389]

-

Granados M, Kim K I, Tompkin J, Theobalt C. 2013. Automatic noise modeling for ghost-free HDR reconstruction. ACM Transactions on Graphics, 32(6): #201 [DOI:10.1145/2508363.2508410]

-

Grossberg M D and Nayar S K. 2003. High dynamic range from multiple images: which exposures to combine?//Proceedings of ICCV Workshop on Color and Photometric Methods in Computer Vision (CPMCV). Nice, France: CPMCV: #3

-

Gu B, Li W J, Wong J T, Zhu M Y, Wang M H. 2012. Gradient field multi-exposure images fusion for high dynamic range image visualization. Journal of Visual Communication and Image Representation, 23(4): 604-610 [DOI:10.1016/j.jvcir.2012.02.009]

-

Gu J W, Kobayashi T, Gupta M and Nayar S K. 2011. Multiplexed illumination for scene recovery in the presence of global illumination//Proceedings of 2011 International Conference on Computer Vision. Barcelona, Spain: IEEE: 691-698[DOI: 10.1109/ICCV.2011.6126305]

-

Guan X M, Qu X H, Niu B, Zhang Y J, Zhang F M. 2021. Pixel-level mapping method in high dynamic range imaging system based on DMD modulation. Optics Communications, 499: #127278 [DOI:10.1016/j.optcom.2021.127278]

-

Guo X J, Li Y, Ma J Y, Ling H B. 2020. Mutually guided image filtering. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(3): 694-707 [DOI:10.1109/tpami.2018.2883553]

-

Gupta A, Ingle A and Gupta M. 2019b. Asynchronous single-photon 3D imaging//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea (South): IEEE: 7908-7917[DOI: 10.1109/ICCV.2019.00800]

-

Gupta A, Ingle A, Velten A and Gupta M. 2019a. Photon-flooded single-photon 3D cameras//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE: 6763-6772[DOI: 10.1109/CVPR.2019.00693]

-

Gupta M, Agrawal A, Veeraraghavan A and Narasimhan S G. 2011. Structured light 3D scanning in the presence of global illumination//Proceedings of the CVPR 2011. Colorado Springs, USA: IEEE: 713-720[DOI: 10.1109/CVPR.2011.5995321]

-

Gupta M and Nayar S K. 2012. Micro phase shifting//Proceedings of 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE: 813-820[DOI: 10.1109/CVPR.2012.6247753]

-

Gupta M, Nayar S K, Hullin M B, Martin J. 2015. Phasor imaging: a generalization of correlation-based time-of-flight imaging. ACM Transactions on Graphics, 34(5): #156 [DOI:10.1145/2735702]

-

Gupta M, Yin Q and Nayar S K. 2013. Structured light in sunlight//Proceedings of 2013 IEEE International Conference on Computer Vision. Sydney, Australia: IEEE: 545-552[DOI: 10.1109/ICCV.2013.73]

-

Haim H, Elmalem S, Giryes R, Bronstein A M, Marom E. 2018. Depth estimation from a single image using deep learned phase coded mask. IEEE Transactions on Computational Imaging, 4(3): 298-310 [DOI:10.1109/tci.2018.2849326]

-

Halé A, Trouvé-Peloux P, Volatier J B. 2021. End-to-end sensor and neural network design using differential ray tracing. Optics Express, 29(21): 34748-34761 [DOI:10.1364/OE.439571]

-

Hall E M, Fahringer T W, Guildenbecher D R, Thurow B S. 2018. Volumetric calibration of a plenoptic camera. Applied Optics, 57(4): 914-923 [DOI:10.1364/AO.57.000914]

-

Han J, Zhou C, Duan P Q, Tang Y H, Xu C, Xu C, Huang T J and Shi B X. 2020. Neuromorphic camera guided high dynamic range imaging//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: IEEE: 1727-1736[DOI: 10.1109/CVPR42600.2020.00180]

-

Hanwell D, Seck A and Kornienko A. 2020. Spatially multiplexed exposure. U.S., No. 16/150,884

-

Hasinoff S W, Durand F and Freeman W T. 2010. Noise-optimal capture for high dynamic range photography//Proceedings of 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Francisco, USA: IEEE: 553-560[DOI: 10.1109/CVPR.2010.5540167]

-

Hasinoff S W, Sharlet D, Geiss R, Adams A, Barron J T, Kainz F, Chen J W, Levoy M. 2016. Burst photography for high dynamic range and low-light imaging on mobile cameras. ACM Transactions on Graphics, 35(6): #192 [DOI:10.1145/2980179.2980254]

-

He L, Chen G, Guo H, Jin W Q. 2020. A dynamic scene HDR fusion method based on dual-channel low-light-level CMOS camera. Infrared Technology, 42(4): 340-347 (贺理, 陈果, 郭宏, 金伟其. 2020. 一种基于双通道CMOS相机的低照度动态场景HDR融合方法. 红外技术, 42(4): 340-347)

-

He S W. 2015. Study of Pixel-Level Light Adjusting Technology Based on DMD. Changchun: Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences (何舒文. 2015. 基于数字微镜的像素级调光技术研究. 长春: 中国科学院研究生院(长春光学精密机械与物理研究所))

-

Heber S and Pock T. 2016. Convolutional networks for shape from light field//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE: 3746-3754[DOI: 10.1109/CVPR.2016.407]

-

Heber S, Yu W and Pock T. 2017. Neural EPI-volume networks for shape from light field//Proceedings of 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE: 2271-2279[DOI: 10.1109/ICCV.2017.247]

-

Heide F, Steinberger M, Tsai Y T, Rouf M, Pająk D, Reddy D, Gallo O, Liu J, Heidrich W, Egiazarian K, Kautz J, Pulli K. 2014. FlexISP: a flexible camera image processing framework. ACM Transactions on Graphics, 33(6): #231 [DOI:10.1145/2661229.2661260]

-

Hou X L, Zhang J C, Zhou P P. 2021. Reconstructing a high dynamic range image with a deeply unsupervised fusion model. IEEE Photonics Journal, 13(2): #3900210 [DOI:10.1109/JPHOT.2021.3058740]

-

Hou X, Duan J and Qiu G. 2017. Deep feature consistent deep image transformations: downscaling, decolorization and HDR tone mapping[EB/OL]. [2021-12-15]. https://arxiv.org/pdf/1707.09482.pdf

-

Hu J, Gallo O, Pulli K and Sun X B. 2013. HDR deghosting: how to deal with saturation?//Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition. Portland, USA: IEEE: 1163-1170[DOI: 10.1109/CVPR.2013.154]

-

Hu Y M, Wang B Y and Lin S. 2017. FC4: fully convolutional color constancy with confidence-weighted pooling//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE: 330-339[DOI: 10.1109/CVPR.2017.43]

-

Hu Y X, Wan L. 2014. Multi exposure image fusion based on dynamic range extending. Computer Engineering and Applications, 50(1): 153-155, 214 (胡燕翔, 万莉. 2014. 大动态范围多曝光图像融合方法. 计算机工程与应用, 50(1): 153-155, 214) [DOI:10.3778/j.issn.1002-8331.1203-0003]

-

Huang E, Ma Q, Liu Z W. 2017. Etalon array reconstructive spectrometry. Scientific Reports, 7(1): #40693 [DOI:10.1038/srep40693]

-

Huang F, Zhou D M, Nie R C, Yu C B. 2018a. A color multi-exposure image fusion approach using structural patch decomposition. IEEE Access, 6: 42877-42885 [DOI:10.1109/access.2018.2859355]

-

Huang Q, Kim H Y, Tsai W J, Jeong S Y, Choi J S, Kuo C C J. 2018b. Understanding and removal of false contour in HEVC compressed images. IEEE Transactions on Circuits and Systems for Video Technology, 28(2): 378-391 [DOI:10.1109/TCSVT.2016.2607258]

-

Hubold M, Berlich R, Gassner C, Brüning R and Brunner R. 2018. Ultra-compact micro-optical system for multispectral imaging//Proceedings of SPIE 10545, MOEMS and Miniaturized Systems XVII. San Francisco, USA: SPIE: #2295343[DOI: 10.1117/12.2295343]

-

Huynh T T, Nguyen T D, Vo M T and Dao S V T. 2019. High dynamic range imaging using a 2×2 camera array with polarizing filters//Proceedings of the 19th International Symposium on Communications and Information Technologies. Ho Chi Minh City, Vietnam: IEEE: 183-187[DOI: 10.1109/ISCIT.2019.8905122]

-

Ignatov A, Patel J and Timofte R. 2020. Rendering natural camera bokeh effect with deep learning//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Seattle, USA: IEEE: 1676-1686[DOI: 10.1109/CVPRW50498.2020.00217]

-

Jeon D S, Baek S H, Yi S, Fu Q, Dun X, Heidrich W, Kim M H. 2019b. Compact snapshot hyperspectral imaging with diffracted rotation. ACM Transactions on Graphics, 38(4): #117 [DOI:10.1145/3306346.3322946]

-

Jeon H G, Park J, Choe G, Park J, Bok Y, Tai Y W and Kweon I S. 2015. Accurate depth map estimation from a lenslet light field camera//Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE: 1547-1555[DOI: 10.1109/CVPR.2015.7298762]

-

Jeon H G, Park J, Choe G, Park J, Bok Y, Tai Y W, Kweon I S. 2019a. Depth from a light field image with learning-based matching costs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2): 297-310 [DOI:10.1109/TPAMI.2018.2794979]

-

Jiang H Y and Zheng Y Q. 2019. Learning to see moving objects in the dark//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea (South): IEEE: 7323-7332[DOI: 10.1109/ICCV.2019.00742]

-

Jiang S Y, Chen K, Xu Z H, Feng H J, Li Q, Chen Y T. 2015. Multi-exposure image fusion based on well-exposedness assessment. Journal of Zhejiang University (Engineering Science), 49(3): 470-475, 481 (江燊煜, 陈阔, 徐之海, 冯华君, 李奇, 陈跃庭. 2015. 基于曝光适度评价的多曝光图像融合方法. 浙江大学学报(工学版), 49(3): 470-475, 481) [DOI:10.3785/j.issn.1008-973X.2015.03.011]

-

Jiang Y T, Choi I, Jiang J and Gu J W. 2021. HDR video reconstruction with tri-exposure quad-bayer sensors[EB/OL]. [2021-12-15]. https://arxiv.org/pdf/2103.10982.pdf

-

Johannsen O, Honauer K, Goldluecke B, Alperovich A, Battisti F, Bok Y, Brizzi M, Carli M, Choe G, Diebold M, Gutsche M, Jeon H G, Kweon I S, Park J, Park J, Schilling H, Sheng H, Si L P, Strecke M, Sulc A, Tai Y W, Wang Q, Wang T C, Wanner S, Xiong Z, Yu J Y, Zhang S and Zhu H. 2017. A taxonomy and evaluation of dense light field depth estimation algorithms//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Honolulu, USA: IEEE: 1795-1812[DOI: 10.1109/CVPRW.2017.226]

-

Johannsen O, Sulc A and Goldluecke B. 2016. What sparse light field coding reveals about scene structure//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE: 3262-3270[DOI: 10.1109/CVPR.2016.355]

-

Kalantari N K, Ramamoorthi R. 2017. Deep high dynamic range imaging of dynamic scenes. ACM Transactions on Graphics, 36(4): #144 [DOI:10.1145/3072959.3073609]

-

Kalantari N K, Shechtman E, Barnes C, Darabi S, Goldman D B, Sen P. 2013. Patch-based high dynamic range video. ACM Transactions on Graphics, 32(6): #202 [DOI:10.1145/2508363.2508402]

-

Kalantari N K, Ramamoorthi R. 2019. Deep HDR video from sequences with alternating exposures. Computer Graphics Forum, 38(2): 193-205 [DOI:10.1111/cgf.13630]

-

Kang S B, Uyttendaele M, Winder S, Szeliski R. 2003. High dynamic range video. ACM Transactions on Graphics, 22(3): 319-325 [DOI:10.1145/882262.882270]

-

Kavadias S, Dierickx B, Scheffer D, Alaerts A, Uwaerts D, Bogaerts J. 2000. A logarithmic response CMOS image sensor with on-chip calibration. IEEE Journal of Solid-State Circuits, 35(8): 1146-1152 [DOI:10.1109/4.859503]

-

Kaya B, Can Y B and Timofte R. 2019. Towards spectral estimation from a single RGB image in the wild//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision Workshop. Seoul, Korea (South): IEEE: 3546-3555[DOI: 10.1109/ICCVW.2019.00439]

-